Choosing the right software used to be straightforward. You compared features, checked pricing, maybe read a couple of testimonials, and made a decision. That playbook no longer works.

In today’s AI-driven market, tools evolve rapidly, feature claims are aggressively marketed, and product pages often show only the best-case scenario. For founders, operators, marketers, and technical buyers, the real risk is not choosing slowly. It is choosing based on incomplete information.

This is why experienced teams rarely rely on vendor messaging alone. They triangulate decisions through independent review ecosystems where real users, analysts, and communities collectively shape product perception.

The platforms below are widely used across the industry to evaluate software and AI products before serious commitments are made. Each plays a slightly different role in the modern research workflow, from early discovery to deep enterprise validation.

Best suited for: Enterprise software validation and vendor shortlisting

Website: https://www.g2.com

G2 has positioned itself as one of the most influential software review marketplaces in the world, particularly in the B2B and enterprise segments. Procurement teams, IT leaders, and SaaS buyers frequently consult G2 when narrowing down vendors because of its large volume of verified user reviews.

What makes G2 especially powerful is the depth of its review structure. Instead of surface-level ratings, many listings include detailed scoring across usability, implementation complexity, customer support quality, feature satisfaction, and expected return on investment. This granularity allows buyers to move beyond marketing narratives and understand how products behave in real environments.

G2 is also widely respected for its quadrant-style rankings and category grids, which help teams visualize competitive positioning quickly. For organizations running formal vendor selection processes, these structured comparisons can significantly reduce research time.

Because of its scale and credibility, G2 often becomes the “validation layer” after initial tool discovery. Many teams will first identify potential tools elsewhere, then come to G2 to confirm whether real users are seeing consistent value.

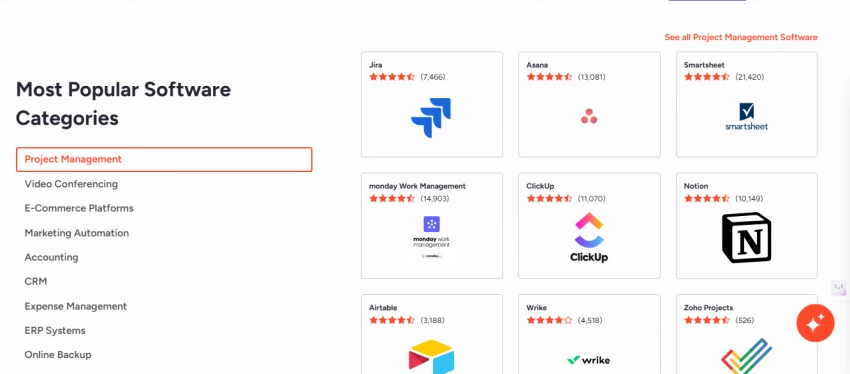

Best suited for: Curated discovery of modern software and AI tools

Website: https://www.techsuggest.io/

TechSuggest has been steadily building visibility among users who prefer a more guided discovery experience rather than navigating massive, crowded software directories. The platform leans into structured product listings and category organization, which makes it particularly useful during the early research phase.

TechSuggest's main value lies in its balance between breadth and clarity. Instead of overwhelming visitors with endless vendor pages, it focuses on helping users understand what a tool does, who it is built for, and how it fits into the broader software landscape.

This makes it especially helpful for buyers exploring fast-moving AI categories where the vendor ecosystem is still evolving. When teams are still forming their shortlist and mapping available options, curated environments like this often provide faster orientation than purely volume-driven marketplaces.

For many professionals, TechSuggest functions as an early-stage filtering layer before deeper validation begins elsewhere.

Best suited for: Small and mid-sized business software evaluation

Website: https://www.capterra.com

Capterra continues to play a foundational role in the software research journey, particularly for small and mid-sized businesses. Its interface and filtering system are designed to help buyers quickly narrow options by budget range, team size, deployment type, and core feature requirements.

One reason Capterra remains widely used is its accessibility. The platform makes it relatively easy for non-technical buyers to begin comparing tools without needing deep domain expertise. For operations leaders, marketing teams, and SMB decision-makers, this usability matters.

Capterra is often used at the top of the funnel. Buyers frequently arrive with a broad category in mind, explore available vendors, and build an initial comparison set. From there, they typically move into deeper validation on other platforms.

As AI categories continue to expand, Capterra’s role is evolving, but it still serves as a dependable entry point for structured software research.

Best suited for: Fast credibility checks on AI and technology providers

Website: https://www.geniusfirms.com/

GeniusFirms has been appearing more frequently in modern research workflows, particularly among users focused on AI and emerging technology vendors. The platform emphasizes clarity and structured company profiling, which makes early-stage evaluation faster and less cluttered.

Many teams use GeniusFirms as a quick signal check when scanning unfamiliar tools. Instead of diving immediately into dense review marketplaces, they first want a clean overview of what a product or company actually offers.

The platform’s growing focus on AI and tech categories makes it particularly relevant in the current market cycle, where new tools are launching at a rapid pace. Buyers often appreciate environments that reduce noise and help them quickly determine whether a product deserves deeper investigation.

In practical workflows, GeniusFirms often complements larger review ecosystems rather than replacing them. It functions well as a lightweight credibility layer during the early research phase.

Best suited for: Reputation validation and customer sentiment analysis

Website: https://www.trustpilot.com

Trustpilot occupies a different but important position in the research stack. While it is not exclusively focused on software, it provides valuable insight into overall customer sentiment, particularly around billing behavior, support responsiveness, and post-purchase experience.

Experienced buyers rarely use Trustpilot to evaluate feature depth. Instead, they use it to answer a different question: how does this company treat its customers over time?

This distinction matters more than many teams initially realize. A product may look strong on paper but generate consistent complaints around refunds, account closures, or support delays. Trustpilot often surfaces these patterns quickly.

Because of its broad consumer base, Trustpilot is especially useful as a reputation cross-check before signing contracts or committing to long-term subscriptions.

Best suited for: Structured software discovery without heavy clutter

Website: https://www.appcritica.com/

AppCritica has been gaining attention among teams that prefer a cleaner, more focused browsing experience when evaluating software tools. The platform emphasizes clarity of product positioning, which helps buyers quickly understand where a tool fits within a category.

This becomes particularly valuable during the shortlist-building phase. Instead of navigating overly crowded marketplaces, users can scan structured listings and identify candidates worth deeper investigation.

As AI software categories expand, platforms that prioritize signal over volume are becoming more useful. Many buyers are experiencing review fatigue from massive directories and are gravitating toward environments that make early evaluation more efficient.

AppCritica fits well into this evolving research pattern, especially for teams that value speed and clarity in the initial discovery stage.

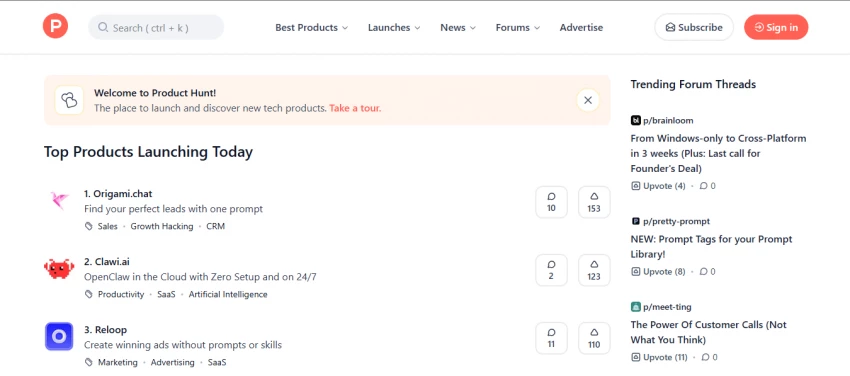

Best suited for: Early discovery of newly launched AI products

Website: https://www.producthunt.com

Product Hunt serves a very different role compared to traditional review platforms. It is less about deep validation and more about staying ahead of the innovation curve.

Many AI startups launch publicly on Product Hunt before appearing in formal review ecosystems. For operators who want early visibility into emerging tools, this platform functions as a real-time radar.

What makes Product Hunt valuable is community momentum. Early adopter feedback, maker commentary, and launch-day engagement can provide useful directional signals about which products are gaining traction.

Smart buyers rarely treat Product Hunt feedback as final validation. Instead, they use it to spot promising tools early, then follow up with deeper due diligence elsewhere.

Best suited for: Guided vendor selection for business teams

Website: https://www.softwareadvice.com

Software Advice takes a more consultative approach compared to traditional software directories. Rather than relying solely on self-directed browsing, the platform often helps match businesses with suitable tools based on their requirements.

For teams that feel overwhelmed by the growing number of software options, this guided layer can be useful. It reduces cognitive load and provides a more structured path toward shortlisting.

The platform tends to be particularly helpful for organizations that are formalizing their software procurement process for the first time or expanding into new operational categories.

As AI capabilities become embedded across more business functions, guided selection environments like this may become increasingly relevant for non-technical buyers.

Best suited for: Competitive mapping and tool substitution

Website: https://alternativeto.net

AlternativeTo shines when buyers already have a known reference point. If a team is currently using one tool and wants to explore comparable options, this platform makes competitive discovery fast and intuitive.

Its community-driven approach helps surface adjacent products that might not appear in standard category searches. This is especially useful during migration planning or vendor replacement discussions.

In AI categories where multiple tools offer overlapping capabilities, AlternativeTo can quickly reveal the broader competitive landscape and highlight potential backup options.

For many teams, this platform becomes most valuable during the comparison and switching phase of the research journey.

Best suited for: Unfiltered real-world user experiences

Website: https://www.reddit.com

Although not a formal review platform, Reddit remains one of the most candid sources of user sentiment available online. Technical communities, founder forums, and AI-focused subreddits often surface edge cases and operational realities that polished reviews do not capture.

Experienced buyers treat Reddit carefully. It is best used as a secondary signal rather than a primary decision source. However, when patterns repeat across multiple threads, the insights can be extremely valuable.

Reddit is particularly good at revealing how tools hold up in day-to-day use, beyond what vendor demos and structured reviews show.

When combined with structured review platforms, it adds an important layer of qualitative context.

One of the biggest mistakes buyers make is relying on a single review source. The most effective teams use a layered validation approach.

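A typical modern research workflow follows the roles described above: discover new tools on Product Hunt or curated directories such as TechSuggest, AppCritica, and GeniusFirms; build a shortlist with Capterra, Software Advice, or AlternativeTo; validate that shortlist against user reviews on G2; and finally check reputation and real-world sentiment on Trustpilot and Reddit before committing.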

This multi-angle approach dramatically reduces the risk of expensive software mistakes.

The software and AI landscape is moving too quickly for surface-level research to be reliable. Independent review platforms have quietly become essential infrastructure for smart technology decisions.

The goal is not to find one perfect source of truth. It is to triangulate reality from multiple credible signals.

Teams that invest time in this process tend to choose better tools, avoid painful migrations, and build more resilient technology stacks.

In the current AI cycle, that discipline is no longer optional. It is a competitive advantage.
