When I ask ChatGPT a question, the answer feels instant and weightless. But behind the smooth interface, there’s a hidden cost: energy, water, and carbon emissions. So, how much does a single chat impact the environment? And what can we do to make AI more sustainable?

Let’s start with numbers. Training large AI models is famously energy-intensive. For example, training GPT-3 used around 1,287 MWh of electricity and produced 552 tons of CO₂, roughly equal to the annual emissions of 123 gasoline-powered cars. But training happens only once per model. The ongoing footprint comes from inference: the process of answering our daily questions.

A 2023 study estimated that chatting with GPT-3 for 20–50 questions consumes as much electricity as charging a smartphone. That doesn’t sound like much at first. But consider scale: ChatGPT handles over 10 million daily users, each asking multiple questions. That adds up to an enormous demand on data centers running these models 24/7.

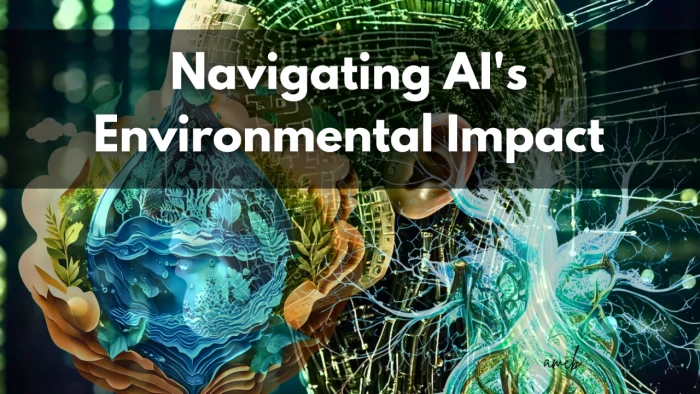

Electricity isn’t the only resource AI consumes. Data centers need cooling, and that often means water. A 2023 paper from the University of California, Riverside found that ChatGPT consumes about 500 ml of fresh water for every 20–50 prompts.

To put that in perspective:

These numbers highlight how each question, though tiny on its own, contributes to a much larger ecological footprint.
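To make the water figure concrete, here is a minimal sketch that turns "500 ml per 20–50 prompts" into a per-prompt range. The function and constant names are my own, and the result is only as good as the estimate it starts from.

```python
# Figure quoted above: about 500 ml of fresh water per 20-50 prompts.
WATER_ML_PER_BATCH = 500
PROMPTS_PER_BATCH = (20, 50)


def water_per_prompt_ml(water_ml=WATER_ML_PER_BATCH, batch=PROMPTS_PER_BATCH):
    """Return (low, high) millilitres of water per single prompt."""
    fewest, most = batch
    # The more prompts that share the same 500 ml, the less water each one uses.
    return water_ml / most, water_ml / fewest


def water_for_prompts_litres(n_prompts):
    """Return (low, high) litres of water for n_prompts prompts."""
    low_ml, high_ml = water_per_prompt_ml()
    return n_prompts * low_ml / 1000, n_prompts * high_ml / 1000


low, high = water_per_prompt_ml()
print(f"Per prompt: {low:.0f}-{high:.0f} ml")
```

Under that estimate, a single prompt works out to roughly 10–25 ml, and `water_for_prompts_litres` scales the same range to any number of prompts.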

The environmental cost depends heavily on where servers are located. If a data center runs mostly on renewable energy (like in Sweden or Iceland), its carbon footprint is far lower than one powered by coal-heavy grids.
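A rough way to see why location matters is to treat emissions as energy multiplied by the grid’s carbon intensity. The sketch below backs out the intensity implied by the GPT-3 training figures quoted earlier (1,287 MWh and 552 tons, which I treat as metric tonnes), then reruns the same energy on two grids. The 50 and 800 g CO₂/kWh values are placeholders I picked for illustration, not measurements of any real grid.

```python
# GPT-3 training figures quoted earlier in this article.
TRAINING_ENERGY_MWH = 1287
TRAINING_EMISSIONS_TONNES = 552  # assumed to be metric tonnes


def implied_intensity_g_per_kwh(energy_mwh, emissions_tonnes):
    """Carbon intensity implied by an energy/emissions pair, in g CO2 per kWh."""
    grams = emissions_tonnes * 1e6   # tonnes -> grams
    kwh = energy_mwh * 1e3           # MWh -> kWh
    return grams / kwh


def emissions_tonnes(energy_mwh, intensity_g_per_kwh):
    """Emissions in tonnes for a given energy use and grid intensity."""
    return energy_mwh * 1e3 * intensity_g_per_kwh / 1e6


implied = implied_intensity_g_per_kwh(TRAINING_ENERGY_MWH, TRAINING_EMISSIONS_TONNES)
print(f"Implied intensity: {implied:.0f} g CO2/kWh")

# Hypothetical grids, illustrative values only.
for label, intensity in [("low-carbon grid", 50), ("coal-heavy grid", 800)]:
    tonnes = emissions_tonnes(TRAINING_ENERGY_MWH, intensity)
    print(f"{label}: {tonnes:.0f} tonnes CO2")
```

The same 1,287 MWh lands at very different totals depending on the intensity you plug in, which is the whole point about where servers sit.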

Companies like Microsoft, Google, and OpenAI are pushing for carbon neutrality and water-positive operations by 2030. Microsoft, for instance, has pledged to replenish more water than it consumes. But until grids everywhere are fully green, every ChatGPT session still leaves a carbon trace.

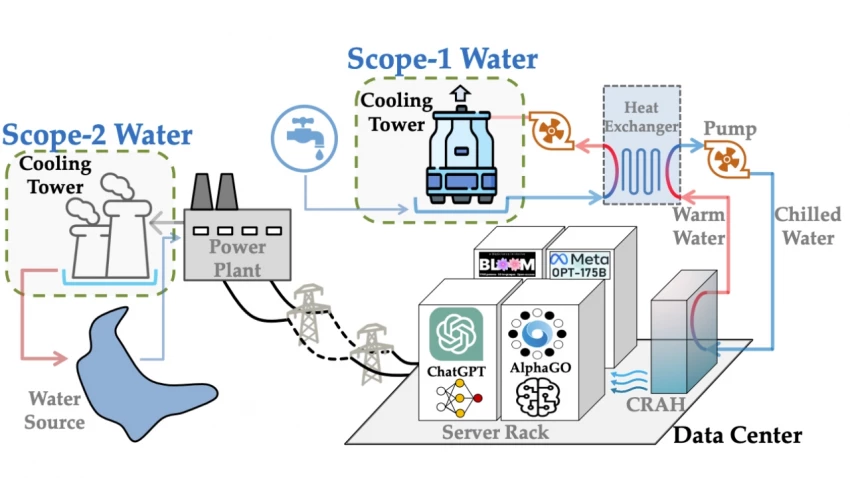

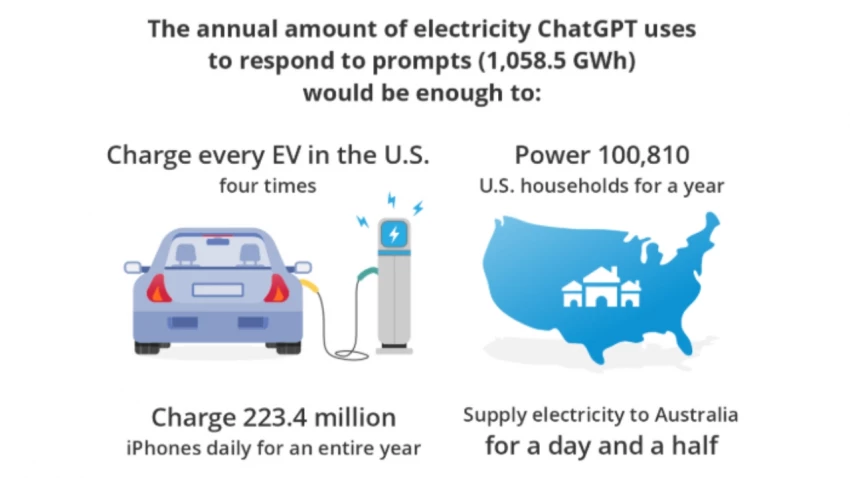

Let’s do some quick math. If a chat of 20–50 questions costs about as much energy as one phone charge, that feels small. But ChatGPT serves hundreds of millions of prompts daily.

Imagine:

Suddenly, those casual questions don’t seem so light.
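Here is a back-of-the-envelope sketch of that scale-up. It reuses the "20–50 questions per phone charge" estimate from earlier. The other two inputs are my assumptions, not reported figures: 15 Wh for a full phone charge and 200 million prompts a day as a stand-in for "hundreds of millions". Swap in your own values.

```python
# From the article: 20-50 questions use about as much electricity as one phone charge.
QUESTIONS_PER_CHARGE = (20, 50)

# Assumptions for illustration only (not from the article):
PHONE_CHARGE_WH = 15.0        # hypothetical energy for one full smartphone charge
DAILY_PROMPTS = 200_000_000   # hypothetical stand-in for "hundreds of millions"


def phone_charges_per_day(daily_prompts=DAILY_PROMPTS, per_charge=QUESTIONS_PER_CHARGE):
    """Return (low, high) phone-charge equivalents for one day of prompts."""
    fewest, most = per_charge
    return daily_prompts / most, daily_prompts / fewest


def daily_energy_mwh(daily_prompts=DAILY_PROMPTS, charge_wh=PHONE_CHARGE_WH,
                     per_charge=QUESTIONS_PER_CHARGE):
    """Return (low, high) daily inference energy in MWh."""
    low_charges, high_charges = phone_charges_per_day(daily_prompts, per_charge)
    # Wh -> MWh is a factor of one million.
    return low_charges * charge_wh / 1e6, high_charges * charge_wh / 1e6


charges_low, charges_high = phone_charges_per_day()
mwh_low, mwh_high = daily_energy_mwh()
print(f"Phone charges per day: {charges_low:,.0f} to {charges_high:,.0f}")
print(f"Energy per day: {mwh_low:.0f} to {mwh_high:.0f} MWh")
```

With these placeholder inputs, one day of prompts is on the order of millions of phone charges. The exact total moves with every assumption, but the order of magnitude is what matters here.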

The challenge isn’t whether AI uses resources; it’s whether it can scale sustainably. Here’s what experts are looking at:

Not necessarily. The average single chat has a tiny footprint compared to driving, flying, or eating meat. But at scale, millions of us chatting daily creates a ripple effect that’s hard to ignore.

I think about it like this: Do I need this question answered right now? Could I bundle queries instead of asking one at a time? Conscious use, combined with industry-wide improvements, can help balance AI’s environmental cost.

Every time we chat with AI, we’re tapping into vast servers powered by electricity, cooled with water, and linked to carbon emissions. The environmental cost of one chat is small, but multiplied by billions, it becomes significant.

The future of AI depends not just on how smart models become, but also on how sustainable they are. Behind every chat is a choice: we can push for efficiency, transparency, and green energy, or we can let convenience dictate the cost.
