iAsk AI markets itself as a powerful, fast, and unbiased AI search platform, one capable of outperforming both Google-style search engines and mainstream large language models. The company highlights bold metrics: 1.4 million daily searches, competitive benchmark scores, and a Pro model allegedly surpassing GPT-4o in reasoning tasks.

However, when you examine actual user experiences, public review patterns, platform data, and credibility signals, the picture becomes considerably more complex. This research-heavy review analyzes iAsk AI not through marketing claims, but through verifiable evidence and behavioral patterns across the web.

Across Trustpilot, G2, and other verifiable user feedback, several strengths appear repeatedly, especially among students and academic users.

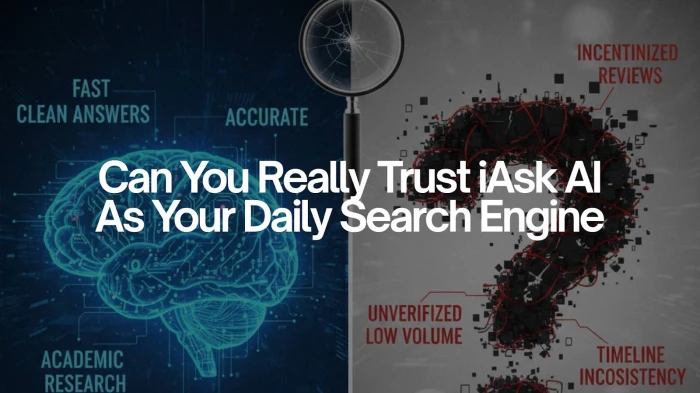

● High factual accuracy for science, history, and structured academic topics

● Cleaner, more digestible answers than traditional search

● Fast summaries that reduce reading time

● Minimal clutter or advertising

● Helpful supporting elements like citations, images, and suggested follow-ups

This pattern suggests that iAsk AI functions best as a direct-answer academic assistant, not as a creative model or contextual reasoning engine. Users consistently describe it as a "quicker Google for study tasks": something that delivers clarity without cognitive overhead.

Negative reviews, although fewer, show remarkable consistency across multiple platforms. That consistency points to structural weaknesses rather than isolated user errors.

● Shallow or surface-level reasoning on multi-step or conceptual questions

● Loss of contextual understanding in longer or chain-of-thought queries

● Short, incomplete explanations where users wanted deeper insights

● Occasional slowdowns, presumably due to server load

● Low-quality image outputs, often irrelevant to the prompt

● A general sense that the tool can feel less advanced than the marketing suggests

This divergence indicates that the platform excels at factual recall but struggles with complex reasoning and nuanced interpretation, placing it closer to a structured Q&A system than to a cutting-edge LLM.

The most significant red flags appear when comparing iAsk AI’s claims to its public footprint. These patterns do not prove wrongdoing, but they are statistically unusual and relevant for anyone evaluating the tool’s trustworthiness.

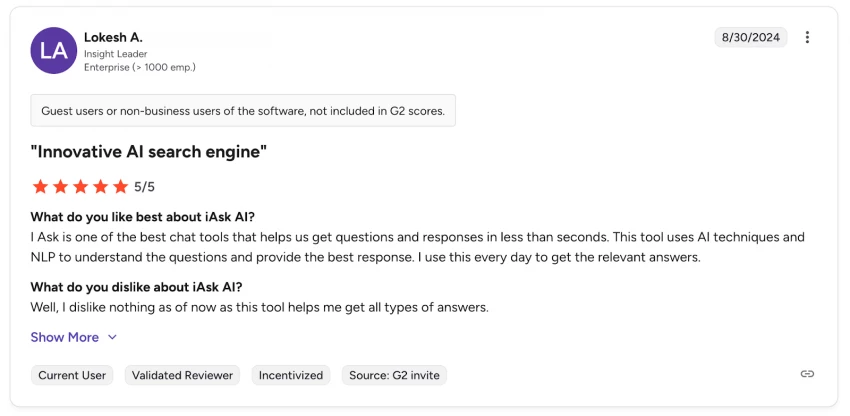

Most five-star G2 reviews are clearly marked as “Incentivized” or “G2 Invite.”

In contrast, nearly all low-rated reviews are organic and unincentivized.

This produces an artificially polarized feedback environment that may not reflect true customer sentiment.

Despite claiming:

● 1.4 million daily searches

● 15 million annual users

iAsk AI has:

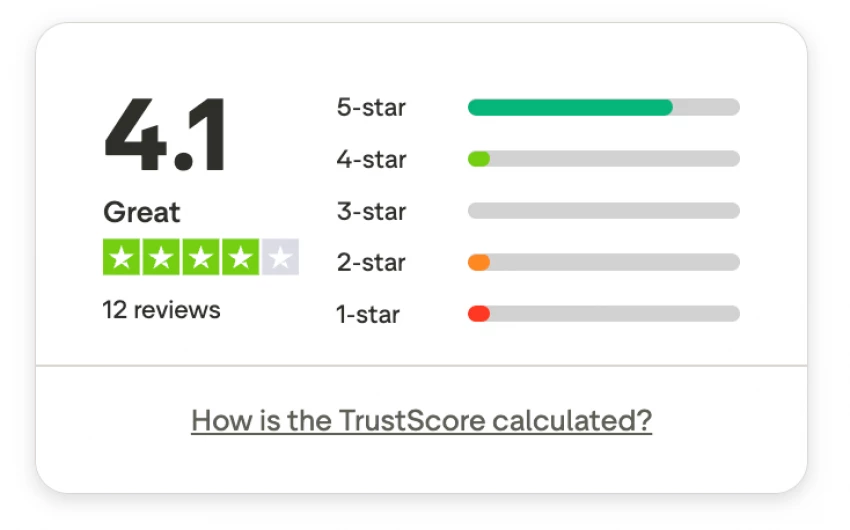

● Only 12 reviews on Trustpilot

● A small overall footprint across the web

This discrepancy is difficult to reconcile. Tools with even a fraction of that claimed usage typically have hundreds or thousands of organic reviews.

iAsk AI cites a founding year of 2013, but:

● Public mentions appear only after 2022

● No archived digital footprint exists before 2021

● No legacy branding or early documentation is visible

This suggests a rebrand, an acquisition, or an incomplete public timeline. None of these is inherently negative, but the inconsistency raises transparency questions.

The platform claims to outperform GPT-4o with an MMLU-Pro score of 85.85 percent, yet the claim comes with:

● No research papers

● No peer-reviewed evaluation

● No independent testing

● No published methodology

Until validated, these remain unsubstantiated marketing claims.

| Pattern | Evidence | Why It Matters |
| --- | --- | --- |
| Heavy incentivized reviews | Most 5-star G2 reviews labeled incentivized | Can distort user perception |
| Very low review count | Only 12 Trustpilot reviews | Usage claims may be exaggerated |
| Timeline inconsistencies | Claims 2013 origin but no footprint until 2022 | Impacts platform credibility |
| Unverified benchmark claims | No independent proof, no methods shared | Reduces trust in performance claims |
| Polarized review distribution | Incentivized 5-stars vs. organic low ratings | Suggests deeper reliability issues |

iAsk AI genuinely delivers value for students, researchers, and anyone seeking quick, structured, and factual answers. Its clean interface and fast output make it a convenient companion for academic tasks or everyday lookups.

However, users should approach its marketing claims with caution. The inconsistencies in history, usage metrics, and review patterns warrant skepticism. While the tool is functional and often effective, its public presentation appears more ambitious than its measurable performance.

You can rely on iAsk AI for quick, factual answers, but not as your primary source for deep reasoning, complex research, or benchmark-defining AI performance.

If you treat it as a useful academic assistant rather than a Google-killer or GPT-4o rival, iAsk AI becomes a practical and time-saving tool.
