When I first opened Final Round AI, I wasn’t looking for hype. I’ve seen too many “AI interview tools” that are just ChatGPT wrapped in a different UI. So I approached this one critically, especially because it positions itself as something you can use during real interviews.

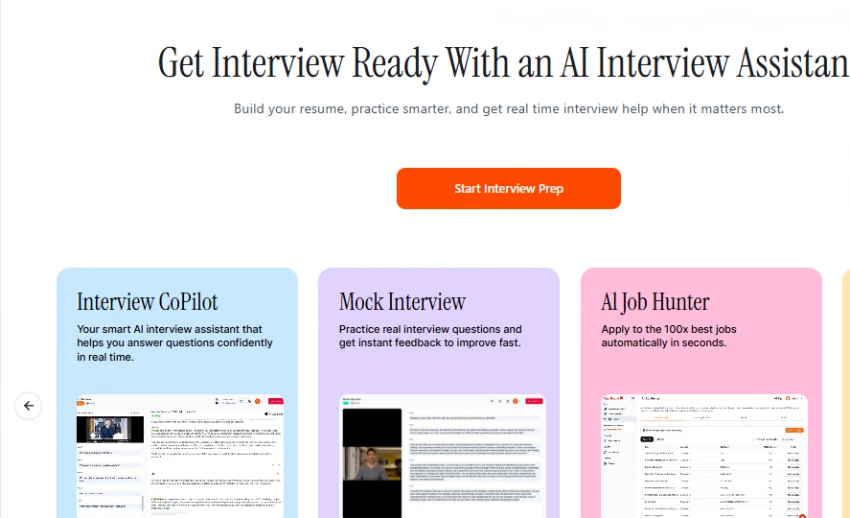

The homepage immediately pushes confidence: live counters showing interviews happening in real time. I’m always cautious about those numbers because they’re impossible to verify, but psychologically they signal activity and scale. I moved past that quickly because what matters is the dashboard.

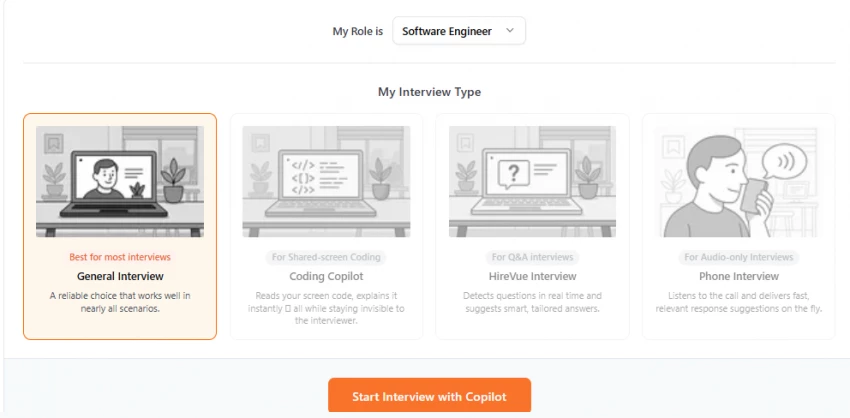

When I entered the dashboard, I noticed something subtle but important. The system isn’t built around “features.” It’s built around interview situations. That’s a meaningful difference. Instead of just giving me “AI Assistant,” it separates things into General Interview, Mock Interview, Coding Interview, Phone Interview, and One-Way Interview.

That tells me this product was designed with real interview formats in mind, not just conversational AI.

From a UX standpoint, I’d rate the dashboard around 8.5 out of 10. It’s clean, not overwhelming, and action-oriented. But it could do a better job explaining onboarding for first-time users. It assumes a bit of technical comfort.

Interview Copilot is the heart of the product. Everything else is secondary.

When I tested Interview Copilot, I simulated behavioral questions first because those are easier to evaluate structurally. I asked something like, “Tell me about a time you dealt with conflict.”

The system transcribed the question quickly. Then, almost immediately, it structured a response in STAR format. What stood out wasn’t just the speed; it was the structure. It broke the answer into Situation, Task, Action, and Result without explicitly labeling it in a robotic way.

But here’s where I noticed the difference between “tool” and “magic.” If I tried reading what it generated word-for-word, it sounded unnatural. Slightly polished. Slightly too clean.

When I instead used it as scaffolding, glancing at the structure, then responding in my own language, it became powerful.

It doesn’t think for you. It organizes your thinking.

Under pressure, that matters.

In terms of performance, I’d give Interview Copilot an 8.8 out of 10. It genuinely reduces cognitive load. But it requires intelligent usage. If someone expects it to replace preparation, they’ll be disappointed.

The mock interview section is solid, but it’s not what differentiates Final Round AI.

It allows you to practice technical, behavioral, and case-based questions. The structure is better than basic AI Q&A tools, but it’s still a simulation. It doesn’t perfectly replicate real recruiter psychology.

What it does well is keep you consistent. You can practice repeatedly without friction.

What it doesn’t fully do is create authentic pressure.

It’s good. It’s not game-changing.

If I had to score it honestly, I’d say around 7.8 out of 10. Useful, but not the reason to buy the platform.

The coding interview support surprised me.

The platform integrates with environments like LeetCode and HackerRank. That alone puts it ahead of many lightweight AI interview tools.

When testing coding-style scenarios, I noticed it gives structured hints rather than blatantly solving everything. It tries to guide logic progression. That’s actually safer and more realistic.

But let me be very clear: if you don’t understand algorithms, this tool will not save you. It helps you think clearly. It doesn’t give you algorithmic depth you never studied.

For candidates who know patterns but freeze under pressure, this is helpful.

For candidates hoping to outsource thinking entirely, it won’t work.

I’d rate coding support around 8.2 out of 10. Strong assistive layer, not a substitute for knowledge.

One thing I didn’t expect was how useful the one-way interview feature could be.

One-way interviews (like HireVue) are psychologically difficult because you’re speaking into silence. Having the AI help structure responses before recording genuinely reduces anxiety.

That said, timing matters. If you’re staring at the screen too long, you risk looking scripted.

For live phone interviews, the integration with different platforms is practical. It’s not flashy, but it works.

I’d rate this area around 8 out of 10. Very practical, especially for early rounds.

This is the part where I stopped seeing Final Round AI as “just another AI assistant” and started seeing product thinking behind it.

Most AI interview tools I’ve tested focus on one thing: mock interviews. That’s it. They simulate questions, maybe give feedback, and that’s where the experience ends.

Final Round AI tries to map the entire interview lifecycle.

And when I explored it closely, I realized that’s intentional.

Before the Interview

The “before” phase includes the resume builder, mock interview system, and job hunter module. It’s not just about generating a resume; it attempts to optimize positioning.

When I explored the resume tool, it wasn’t revolutionary in formatting, but it clearly tries to align resume bullet points with interview readiness. It nudges toward measurable impact and clarity.

The mock interviews in this phase aren’t just practice; they’re rehearsal. The idea is to refine your articulation before the real event.

The Job Hunter feature attempts to streamline application tracking and discovery. It’s useful, though not as advanced as dedicated job search platforms. I see it more as ecosystem completeness than a core strength.

What I appreciated here is continuity. Instead of forcing you to use external tools for preparation, everything sits inside one workflow.

However, personalization depth could improve. It doesn’t deeply model your specific industry trajectory or adjust dynamically over time.

During the Interview

This is the strongest layer of the lifecycle model.

The Copilot and transcription engine become active cognitive support.

Here’s what’s important: it doesn’t just transcribe. It interprets. It categorizes questions. It structures response suggestions.

In a real interview setting, your brain is juggling several things at once.

The Copilot reduces that load by handling structure.

That’s where I felt the value most clearly. Not in content generation, but in mental stabilization.

It’s essentially acting like a silent thinking partner.

That said, it is not deeply adaptive yet. It doesn’t fully model the interviewer’s personality, pacing style, or long-term conversational dynamics. It reacts well, but it doesn’t strategize across the full interview arc.

Still, this is the platform’s competitive edge.

After the Interview

This part is interesting but less mature.

After-session summaries are helpful. You get structured breakdowns and sentiment analysis. It tries to evaluate tone and performance.

The summaries are clean and useful for reflection. You can review how you answered questions and spot improvement areas.

However, this is where I felt limitations.

The analytics are descriptive, not predictive.

They tell you:

What happened

How you responded

Basic sentiment trends

They do not deeply analyze:

Micro-behavioral cues

Speaking speed patterns

Confidence scoring models

Cross-interview improvement tracking with advanced metrics

It’s helpful reflection, not performance science.

This is the area with the most room to grow.

My Honest Evaluation of the Lifecycle Model

Strategically, the concept is strong.

It creates a closed-loop system:

Prepare → Perform → Reflect → Improve.

Few tools attempt that full funnel integration.

Execution-wise:

The “During” layer is the strongest.

The “Before” layer is solid but not groundbreaking.

The “After” layer is useful but could be more sophisticated.

If I break it down in my mind:

Conceptual Design: Very strong

Execution Depth: Good, not elite

Long-term performance modeling: Limited

That’s why I rated the lifecycle system 8.3 out of 10.

It’s well thought out. It shows product intelligence. But its behavioral analytics aren’t sophisticated yet.

If they enhance predictive modeling and longitudinal performance tracking, this could move closer to a 9+.

Right now, it’s strategically impressive, practically useful, but not analytically advanced.

Let’s address the elephant in the room.

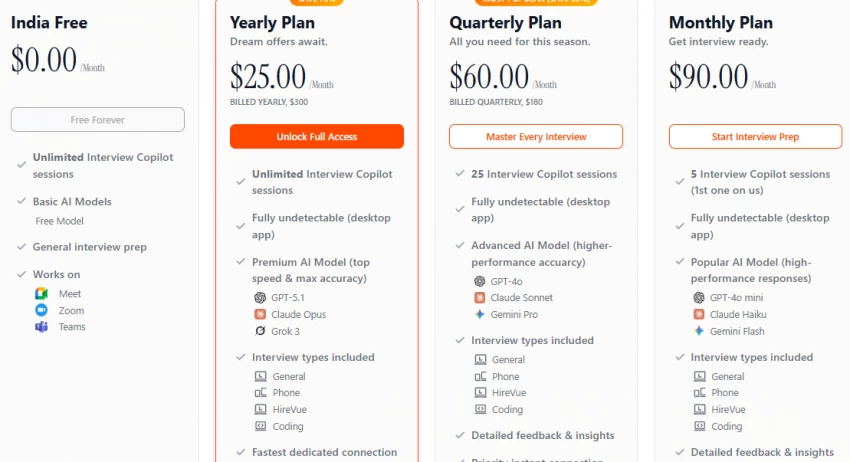

Final Round AI is not cheap.

Across Reddit threads and Glassdoor community discussions, pricing is the most common criticism.

Some users feel it’s overpriced.

Others argue that if it helps you secure a high-paying role, it’s easily worth it.

My take is nuanced.

If you’re interviewing for competitive, high-compensation roles, the ROI can justify it.

If you’re casually applying to entry-level roles, it’s expensive relative to simpler practice tools.

From a value perspective, I’d rate it around 7.5 out of 10. High upside for serious candidates. Low accessibility for budget users.

After exploring the official product pages, I wanted to understand how the tool is perceived outside its own marketing. So I went through public discussions and review platforms to see the pattern of real sentiment.

Here’s what I found.

On Product Hunt, Final Round AI receives generally positive engagement. The tone here is very “early adopter”, meaning the people reviewing it are often tech-forward users who like experimenting with AI productivity tools.

Most reviewers highlight:

Real-time interview assistance as the standout feature

Confidence boost during high-pressure interviews

Clean UI and structured response formatting

What I noticed, though, is that Product Hunt reviews tend to lean optimistic. That’s typical for product launch platforms where early supporters are more enthusiastic than critical.

There isn’t a lot of deep criticism in that section, but there also isn’t heavy long-term performance analysis. The feedback feels like “first impression” validation rather than longitudinal evaluation.

From a credibility standpoint, Product Hunt shows traction and positive initial reception, but it doesn’t give a complete performance picture.

Reddit gives a much more grounded and skeptical tone.

The discussion around “Is Final Round AI worth it?” is divided.

Some users claim it genuinely helped them structure responses and feel more confident during interviews. Others push back and argue that no AI tool can compensate for weak fundamentals.

What stood out to me most in the Reddit thread:

Repeated emphasis that preparation still matters more than tools

Concern about over-reliance on AI

Questions about detection and ethical usage

Reddit users are generally more blunt and less promotional. So when a tool still gets partial validation there, it carries weight.

The consensus pattern seems to be:

Helpful for structure.

Not a substitute for knowledge.

Pricing makes some people hesitate.

That aligns closely with my own assessment.

Trustpilot sentiment appears mixed but leaning positive.

Common praise themes:

Helps organize thoughts quickly

Useful during high-stress interviews

Clean interface

Common criticism themes:

Pricing concerns

Expectation mismatch (some users expecting guaranteed results)

Occasional complaints about performance under specific setups

Trustpilot tends to attract users who had either a very good or a very bad experience, so polarity is normal there.

What I did not see:

Mass allegations of scam behavior or systemic fraud.

That’s important. Mixed reviews are normal. Structural integrity concerns would be a red flag, and I did not observe those.

Overall Trustpilot sentiment suggests:

Legitimate product.

High expectations.

Premium pricing tension.

The Glassdoor community discussion centers heavily on pricing.

The dominant tone here is pragmatic:

Is it worth the cost?

Several users refer to it as “incredibly expensive,” especially when compared to traditional mock interview platforms.

However, the thread also acknowledges:

If you're interviewing for high-paying roles, the price may feel justifiable.

Glassdoor users tend to be active job seekers, so their evaluation is ROI-based.

This reinforces a recurring pattern across platforms:

The main friction point is cost, not legitimacy.

After analyzing Product Hunt, Reddit, Trustpilot, and Glassdoor discussions together, I noticed consistent themes:

The real-time Copilot feature is the strongest differentiator.

Pricing is the most debated aspect.

It helps structure answers but doesn’t replace preparation.

Ethical and detection questions frequently arise.

It is not perceived as a scam product.

The sentiment pattern is surprisingly consistent across very different communities.

That kind of alignment usually indicates the perception is stable, not artificially inflated.

The detectability question always comes up.

Technically, it runs locally. It doesn’t inject itself into meeting software. It doesn’t auto-speak answers. It doesn’t control your microphone.

But “undetectable” is the wrong framing.

If you pause unnaturally, read verbatim, or lose eye contact repeatedly, an interviewer doesn’t need detection software to notice.

Used naturally, it blends.

Used mechanically, it exposes you.

I’d rate discretion around 8 out of 10. The tool is discreet. Your behavior determines risk.

After going through the dashboard, exploring each section, simulating behavioral and technical flows, and reviewing public sentiment, here’s my honest conclusion.

Final Round AI is not a scam.

It’s not a gimmick.

It’s not magic.

It’s a cognitive amplifier.

It’s best suited for:

People who already prepare.

People who understand fundamentals.

People who freeze under pressure.

It’s not suited for:

People looking for shortcuts.

People who haven’t studied.

People expecting automation to replace competence.

If I average everything realistically, I’d give it an overall rating of 8.1 out of 10.

Strong tool.

Premium pricing.

Requires maturity to use properly.
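
For transparency, here is a minimal sketch of how the 8.1 overall rating can be reproduced. It assumes a plain unweighted mean of the eight section scores quoted earlier in this review. The equal weighting and the labels in `scores` are assumptions for illustration, not a formal scoring method.

```python
# Section scores quoted in this review (all out of 10).
# The labels are illustrative names for each section.
scores = {
    "Dashboard UX": 8.5,
    "Interview Copilot": 8.8,
    "Mock interviews": 7.8,
    "Coding support": 8.2,
    "One-way and phone interviews": 8.0,
    "Lifecycle model": 8.3,
    "Value for money": 7.5,
    "Discretion": 8.0,
}

# Assumption: every section carries equal weight.
overall = round(sum(scores.values()) / len(scores), 1)
print(overall)  # 8.1
```

If you care more about one area than another, for example weighting Interview Copilot more heavily than the dashboard, the result will shift accordingly.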

Does Final Round AI actually work?

In my experience, yes, as a thinking assistant. It improves structure and confidence but doesn’t replace preparation.

Is Final Round AI free or paid?

It is primarily a paid subscription product with premium positioning.

How do you use Final Round AI during an interview?

You install the desktop app, activate Interview Copilot, allow it to transcribe the conversation, and use its structured suggestions as mental scaffolding rather than scripts.

What is the Final Round AI app?

It’s a desktop-based AI interview assistant that supports preparation, live interview guidance, coding help, and post-interview analysis.
