I didn’t start using PracTalk because I lacked interview knowledge.

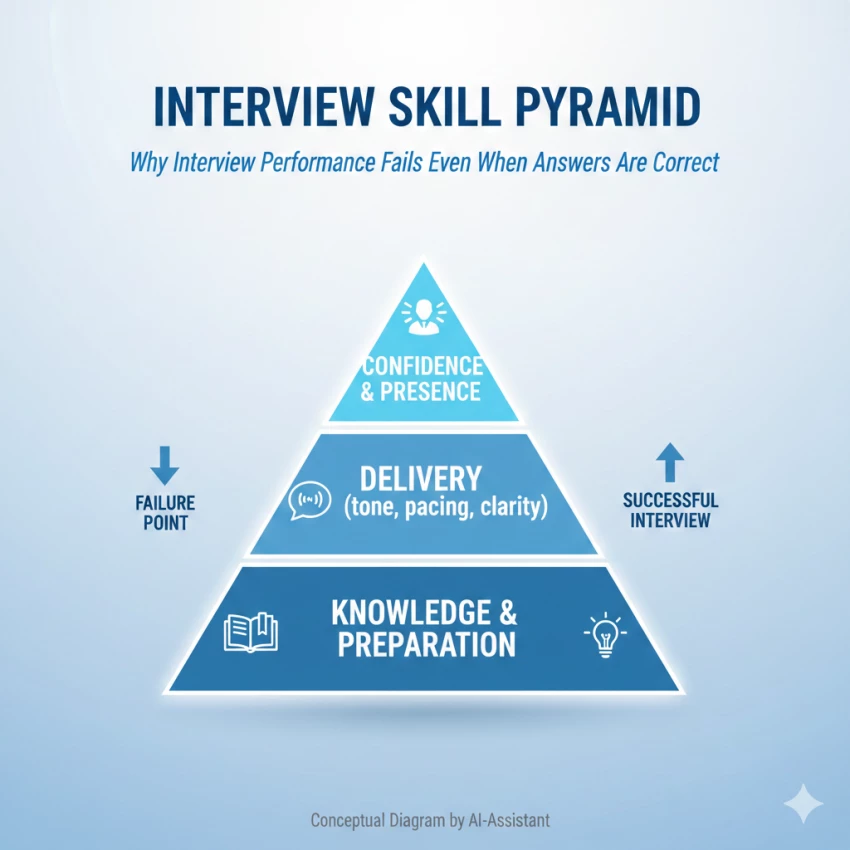

I started using it because knowing answers and delivering them under pressure are two very different skills.

After years of attending real interviews (technical, behavioral, and leadership), I’ve learned that most preparation tools help you think, but very few help you perform. PracTalk positions itself exactly in that gap, so I tested it the same way I would approach a real interview round: camera on, no notes, no retries.

This review is based on repeated sessions across different roles, not a one-time demo.

The biggest difference I noticed within the first five minutes was response behavior.

PracTalk doesn’t wait politely for perfect answers.

It interrupts.

It asks follow-ups when something sounds vague.

It pauses when you over-explain.

That pause, where the AI just waits, creates the same mild discomfort you feel in a real interview when the interviewer is evaluating you silently. That detail alone separates PracTalk from most AI interview tools that simply rotate through questions.

The experience felt closer to a mid-round interview than a coaching session.

I initially underestimated the impact of video mode. I assumed I’d focus mostly on content. Instead, the video layer forced me to notice things I usually ignore:

PracTalk doesn’t just record this; it quantifies it.

The post-interview breakdown highlighted patterns I didn’t consciously register, especially around eye contact and filler phrases. This is where the platform quietly becomes uncomfortable, but useful.

After each session, the feedback is divided into clear performance layers:

What impressed me is that feedback didn’t feel generic. It referenced specific moments, which made iteration meaningful rather than theoretical.

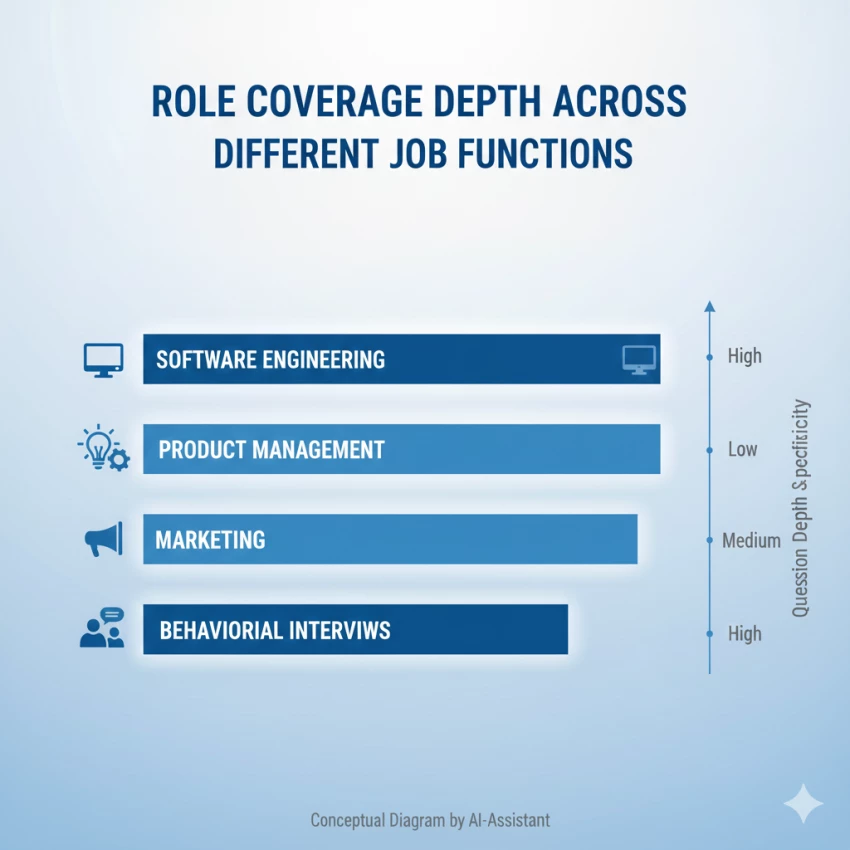

I tested PracTalk across multiple role categories to see if it reused generic prompts.

It didn’t.

For technical roles, the questioning leaned into explanation clarity rather than trivia.

For marketing and product roles, it tested prioritization, trade-offs, and ambiguity handling.

For behavioral rounds, the follow-ups focused on decision rationale, not just outcomes.

This suggested that the question library isn’t just large; it appears to be tailored to the context of each role.

At first glance, the PrepScore looks like a gamified metric. After several sessions, it becomes more meaningful.

The value isn’t the number; it’s the direction.

Watching the score increase as answers became tighter and delivery calmer created a measurable sense of progress. It helped answer a question most candidates struggle with:

“Am I actually improving, or just repeating myself?”

Used correctly, PrepScore functions more like a readiness bar than a scorecard.

PracTalk’s pricing model is one of the most debated aspects.

From personal use:

If you treat each session like a real interview round, the pricing feels justified.

During my usage:

According to their 2025 policy, data is used for personalization and model improvement, and it is not resold to recruiters without consent. In my experience, nothing I saw contradicted that claim.

For a video-heavy AI platform, this matters more than most users realize.

Despite strong performance, a few gaps stood out:

This is not a replacement for learning fundamentals; it’s a performance amplifier.

PracTalk Rating Scorecard

Overall Rating: 4.3 / 5

Best suited for:

Less suitable for:

PracTalk doesn’t teach you what to say.

It trains you how you sound when you say it.

That distinction is subtle, but decisive.

Among AI interview tools, it stands out for one reason: it doesn’t try to be friendly. It tries to be accurate. And in interview preparation, accuracy matters more than encouragement.

If you’re comparing it against platforms like Interviewing.io or HiredScore, PracTalk clearly focuses on self-performance realism, not hiring pipelines or recruiter matching.

For candidates who already know the theory and want to fix execution under pressure, that focus makes all the difference.
