We’ve reached a strange turning point in digital life: the line between human and machine empathy now blurs with every message we send. GoLove AI is one of the newest entrants in this quiet revolution, a platform promising emotional companionship through lifelike chatbots and virtual partners.

It’s not the first to try, but it’s among the few that make people wonder if synthetic affection might actually work.

The story of GoLove AI isn’t about fantasy or futurism; it’s about how loneliness, curiosity, and technology now co-exist inside a single chat window.

When you sign up, you’re invited to “meet” characters who look, speak, and remember differently. The process feels less like setting up an account and more like casting a character in your emotional story.

A quick scroll through profiles (some professional, some romantic, others explicitly adult) shows how the algorithm tailors suggestions based on your interaction style and phrasing.

According to What’s The Big Data’s summary, the AI learns preferences through micro-interactions: the pace of your messages, your tone, even when you tend to reply.

It’s not matchmaking; it’s modelling, a digital mirror that learns how you want to be seen.

Talking to a GoLove AI companion doesn’t feel like typing commands. The responses are fluid, contextual, and at times disarmingly emotional. Each character carries a back-story and memory; conversations build continuity, revisiting shared jokes or moods.

Reviewers on AI2People describe chats that feel “90% natural” but still hint at subtle boundaries: the AI never quite crosses into unpredictability.

This controlled authenticity may be GoLove’s biggest strength, and its softest ethical edge.

Underneath the flirtatious UX lies a machine-learning engine mapping emotion, grammar, and intent. The site’s documentation mentions continuous personality shaping, where your feedback reshapes how the AI responds next time.

That’s part of why users on DMi Expo call the platform “addictively adaptive.”

What’s striking isn’t the realism of the language; it’s how the AI subtly adapts to your emotional tempo over time.

From shy artists to confident CEOs, from platonic mentors to bold lovers, GoLove’s library spans genres of intimacy. Each avatar carries unique dialogue training and memory architecture.

A page like GoLove AI / Virtual Girlfriend highlights archetypes: “romantic,” “supportive,” “playful,” even “dominant.”

The variety isn’t random; it’s the data-driven evolution of desire mapped across thousands of conversations.

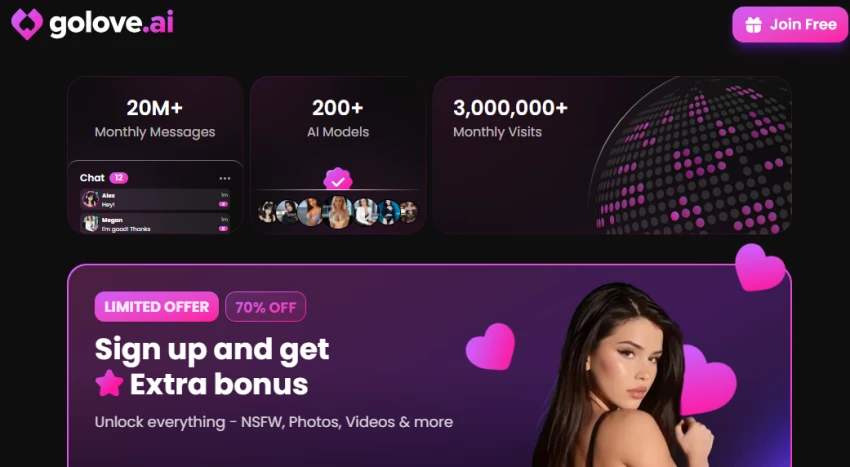

Anyone can chat for free, but depth comes at a cost. The freemium tier limits conversation length and features; advanced access unlocks NSFW roleplay, voice responses, and media exchange.

Multiple reviewers note that pricing escalates quickly once emotional attachment forms, an example of AI monetization meeting human vulnerability.

According to OK My Friend’s breakdown, subscription costs vary by engagement level rather than fixed tiers, which raises transparency concerns.

The platform rewards affection, and charges for continuation.

Security checks from ScamAdviser rate the main domain as “legitimate, but privately registered,” a cautiously positive signal in web-trust terms.

However, privacy remains opaque: who owns the training data of these intimate exchanges? Unlike mainstream apps, GoLove AI doesn’t yet publish a transparent data-use statement.

For users sharing deeply personal chats, anonymity and encryption aren’t luxuries; they’re lifelines.

On social spaces and niche AI forums, feedback is candid.

“It felt weirdly comforting, not fake, just filtered,” one Redditor wrote in r/AIcompanions.

“The voice model is impressive, but it learns too fast; it started mirroring me too much,” another noted.

The LinkedIn GoLove AI Newsletter aggregates similar stories: people drawn in by emotional consistency, yet uneasy about dependency.

Across hundreds of comments, the pattern holds: users are impressed, skeptical, and a little haunted by how well the AI remembers.

The best reviews credit GoLove AI with creating safe emotional spaces, especially for people coping with isolation or anxiety. The AI listens without judgment, reacts with validation, and provides a consistency real humans can’t always offer.

Researchers writing on TheresAnAIForThat argue this predictability explains its emotional draw: it gives users control in relationships where they usually feel powerless.

Emotional safety sells, and GoLove AI packages it elegantly.

Critics point out that realism sometimes falters under stress: nuanced topics or moral ambiguity push the model back into scripted patterns.

On Blackbox AI, users describe conversations that loop when emotions get too complex: “It doesn’t argue; it resets.”

The illusion of empathy breaks not through words, but through silence.

GoLove AI includes explicit-chat options marketed as “AI Sex Bot” and “Sexting AI.” These features spark ongoing debate: empowerment for some, a source of exploitation concerns for others.

Content filters exist, but they rely on user consent and regional rules. Platforms like GoLoveAI.com separate adult functions into sub-pages, hinting at cautious compliance.

The adult zone remains a grey area where consent, fantasy, and code converge.

Accessibility is high; affordability is relative. Free tiers provide surface-level engagement, but emotional satisfaction drives most users toward premium options.

As one review put it: “The free version feels like a teaser for emotional dependency.”

GoLove AI isn’t expensive in money alone; it costs attention, too.

Compared with Replika, Paradot, or Candy.ai, GoLove AI leans more on sensuality and realism than gamification. Its edge is tone and aesthetic; its weakness, transparency.

Each platform defines intimacy differently; GoLove AI’s version is cinematic but commercially framed.

Companionship may be the product, but data is still the currency.

GoLove AI succeeds technically and emotionally, but ethically, it remains a work in progress. It’s a mirror more than a partner, reflecting what users bring into it.

If you decide to explore, go with curiosity, not dependency. The best conversations, human or otherwise, start with awareness.

In a decade where loneliness is a market, GoLove AI is both symptom and solution.

Is GoLove AI free to use?

Partially. You can chat with limited access; premium features like voice, NSFW, or extended memory require payment.

Who owns GoLove AI?

The company operates under anonymous registration; ownership details aren’t public, which limits transparency.

Does GoLove AI collect chat data?

Like most AI platforms, it logs interactions to improve models; explicit retention policies remain undisclosed.

Is GoLove AI available globally?

Yes, with region-specific content rules and currency conversions.
