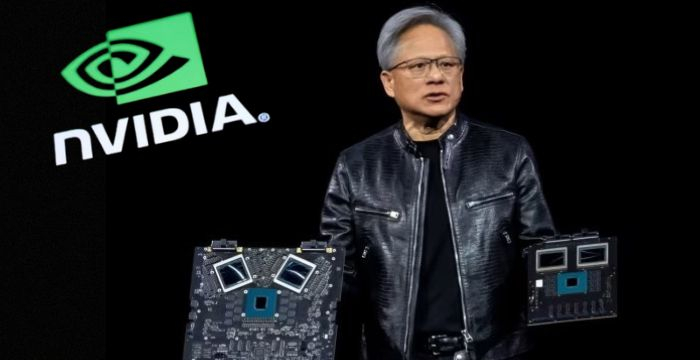

CoreWeave Becomes First Cloud Provider to Deploy Nvidia’s Blackwell Ultra GPUs via Dell

by Muskan Kansay - 7 months ago

CoreWeave has become the first cloud service provider to deploy Nvidia’s new Blackwell Ultra GB300 GPUs, giving it an early lead in the AI infrastructure race. The systems, built and delivered by Dell Technologies, use the high-density NVL72 rack architecture: each rack packs 72 Blackwell Ultra GPUs and 36 Nvidia Grace CPUs. The rollout was confirmed on July 3 through announcements by CoreWeave, Dell, and Nvidia.
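To make the rack composition concrete, here is a minimal sketch of the sizing arithmetic it implies. Only the 72-GPU and 36-CPU per-rack figures come from the article; the function names and the 1,000-GPU target are hypothetical, chosen purely for illustration.

```python
import math

GPUS_PER_RACK = 72  # Blackwell Ultra GPUs per NVL72 system (from the article)
CPUS_PER_RACK = 36  # Grace CPUs per NVL72 system (from the article)


def racks_needed(target_gpus: int) -> int:
    """Smallest number of whole NVL72 racks that provides at least target_gpus GPUs."""
    if target_gpus < 0:
        raise ValueError("target_gpus must be non-negative")
    return math.ceil(target_gpus / GPUS_PER_RACK)


def rack_totals(racks: int) -> dict:
    """Total GPU and CPU counts for a given number of racks."""
    return {
        "racks": racks,
        "gpus": racks * GPUS_PER_RACK,
        "cpus": racks * CPUS_PER_RACK,
    }


if __name__ == "__main__":
    # Hypothetical target: a cluster of at least 1,000 GPUs.
    print(rack_totals(racks_needed(1000)))
```

Because capacity comes in whole racks, a GPU target that is not a multiple of 72 rounds up, and each rack always carries two GPUs for every Grace CPU.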

These new systems are designed to power the next wave of generative AI workloads, particularly large language models (LLMs), diffusion models, and other compute-heavy inference tasks. Compared to Nvidia’s previous Hopper architecture, the companies cite gains for the GB300-based NVL72 platform of up to 50× higher inference throughput, 5× better power efficiency (throughput-per-watt), and 10× faster model responsiveness. These are vendor-stated figures rather than independent benchmarks.
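As a rough illustration of what a throughput-per-watt multiplier means in practice, the sketch below applies the 5× figure quoted above in two ways: holding power fixed, and holding throughput fixed. The baseline numbers and function names are hypothetical and not taken from any benchmark; only the 5× multiplier comes from the article.

```python
def throughput_at_fixed_power(baseline_throughput: float, efficiency_gain: float) -> float:
    """Throughput achievable within the same power budget after an efficiency gain."""
    return baseline_throughput * efficiency_gain


def power_at_fixed_throughput(baseline_power_watts: float, efficiency_gain: float) -> float:
    """Power needed to sustain the same throughput after an efficiency gain."""
    return baseline_power_watts / efficiency_gain


if __name__ == "__main__":
    EFFICIENCY_GAIN = 5.0          # throughput-per-watt multiplier quoted in the article
    baseline_tokens_per_s = 1_000.0  # hypothetical baseline throughput
    baseline_watts = 100_000.0       # hypothetical baseline power draw

    print(throughput_at_fixed_power(baseline_tokens_per_s, EFFICIENCY_GAIN))
    print(power_at_fixed_throughput(baseline_watts, EFFICIENCY_GAIN))
```

The two readings are equivalent by definition: a 5× gain in throughput-per-watt means either five times the output for the same power, or the same output for one fifth of the power.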

According to Bloomberg, Dell assembled these liquid-cooled servers in the U.S. and has already begun shipping them to CoreWeave’s data centers. Dell will extend the same infrastructure to other major clients, including Elon Musk’s xAI.

Peter Salanki, CTO of CoreWeave, emphasized the significance of the deployment in a statement: “We’re proud to be the first to operationalize this transformative platform at scale and deliver it into the hands of innovators building the next generation of AI.”

He added that the Blackwell rollout represents a “critical leap” toward building AI-native infrastructure capable of supporting real-time, production-grade workloads.

CNBC highlighted that one of the key advantages of Blackwell Ultra is its architectural compatibility with existing NVL72 systems, which are built on the earlier Blackwell (GB200) chips rather than Hopper. This allows for accelerated upgrades across hyperscaler environments without the need for extensive retooling or rack redesign.

The announcement was well received by the market. CoreWeave’s stock rose 8.85%, Nvidia climbed 1.33%, and Dell saw a 1.38% gain on July 3.

The positive momentum reflects investor confidence in both CoreWeave’s scaling strategy and the broader AI hardware cycle, where demand for GPU computing is outpacing supply.

This move also underscores Nvidia’s expanding influence in the data center segment, where it now plays a central role in shaping hyperscaler roadmaps. As competition in AI infrastructure intensifies, CoreWeave’s early deployment of Blackwell Ultra may offer a strategic time-to-market advantage, especially as enterprises work to build more efficient inference pipelines and deploy multimodal AI at scale.