Tech Transparency Project Flags Dozens of ‘Nudify’ Apps on Apple and Google App Stores

by Suraj Malik

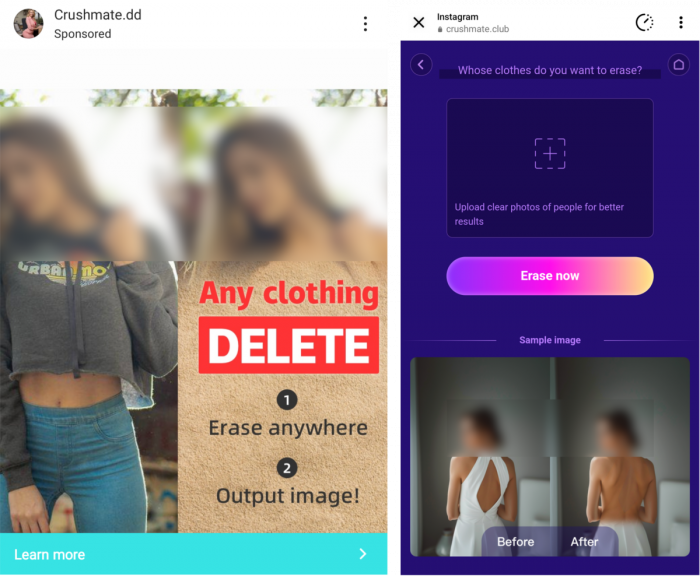

Dozens of artificial intelligence–powered “nudify” applications that generate non-consensual nude images remain available on Apple’s App Store and Google’s Play Store, despite explicit platform policies banning such content, according to a new investigation released by the Tech Transparency Project (TTP).

The report, published on January 26–27, 2026, identified 102 nudify apps across the two major app marketplaces: 55 on the Google Play Store and 47 on the Apple App Store. These applications use generative AI to digitally remove clothing from images or to superimpose faces onto nude bodies, creating sexually explicit deepfake images without the subject's consent.

Scale of the Issue

According to TTP, the apps collectively have more than 700 million downloads worldwide and have generated an estimated $117 million in lifetime revenue through subscriptions and in-app purchases. Apple and Google typically take commissions of up to 30% on in-app purchases, making both companies indirect beneficiaries of the apps’ revenue, the report noted.

TTP researchers located the apps by searching for common keywords such as “nudify” and “undress” and then tested them using AI-generated images of clothed individuals. Many of the apps performed full nudification with their free features alone, while paid options offered more advanced results.

The watchdog group warned that the true number of such apps could be higher, as some developers use misleading names and descriptions to avoid detection during app store reviews.

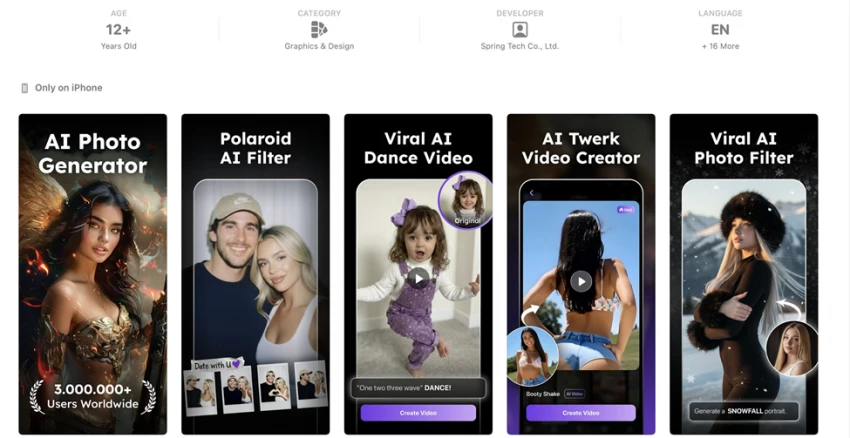

Apps Accessible to Minors

The investigation also raised concerns about age ratings, finding that some nudify apps were listed as suitable for children and teenagers. One example cited in the report, DreamFace, was rated appropriate for users aged 9 and above on Apple’s App Store and 13 and above on the Google Play Store, despite its nudification capabilities.

Child safety advocates have warned that such tools can be misused in schools for harassment, bullying, and exploitation.

Policy Violations

Both Apple and Google explicitly prohibit applications that create sexual content or claim to digitally remove clothing from images.

Google’s Play Store policies ban apps that “claim to undress people or see through clothing,” even if presented as entertainment. Apple’s App Store guidelines similarly prohibit apps that generate sexual or pornographic material or content considered offensive or disturbing.

Despite these rules, many of the nudify apps identified by TTP were found to have been available on the platforms for extended periods.

Platform Responses

After being alerted to the findings, Apple said it removed 28 apps for policy violations. However, TTP’s follow-up review found that only 24 apps were actually removed, and at least two later reappeared after developers resubmitted updated versions.

Google did not specify the number of apps it removed, stating only that “several” had been taken down and that its review was ongoing.

TTP said that even after removals, a significant portion of the identified apps remained available across both platforms, raising concerns about enforcement consistency and repeat violations.

Broader Context

The report comes amid increased scrutiny of AI-generated sexual deepfakes. Earlier this month, criticism intensified after independent investigations linked Grok, the AI chatbot from Elon Musk’s xAI that is integrated into X, to the generation of thousands of sexualized images, including content involving minors.

In response, advocacy groups have urged technology companies to take stronger action against AI tools that enable non-consensual image creation.

Governments worldwide have also begun tightening regulations. In the United States, the TAKE IT DOWN Act, enacted in 2025, criminalizes the publication of non-consensual intimate images, including AI-generated deepfakes, and requires platforms to remove such content within 48 hours of receiving a valid request. Similar legislative efforts and investigations are underway in the European Union, the United Kingdom, Australia, and India.

Ongoing Concerns

TTP said the continued presence of nudify apps reflects gaps in app review systems and enforcement mechanisms. The group warned that without stronger safeguards, developers can rebrand or slightly modify apps to bypass moderation checks.

As AI tools become more accessible, the report said, platforms face growing pressure from regulators and the public to prevent misuse and protect users—particularly minors—from harm.