Artificial intelligence is advancing faster than regulatory clarity.

Developers are deploying generative models, automating workflows, scraping data at scale, and building autonomous systems that interact directly with financial, legal, and communication platforms. In many cases, innovation operates in gray zones long before statutes or court rulings catch up.

But when those gray zones intersect with federal law, the consequences shift dramatically.

The line between aggressive experimentation and criminal exposure is not always obvious, especially in tech and AI-driven environments.

Federal agencies are increasingly focused on technology-enabled offenses. Unlike traditional crimes tied to physical conduct in a single place, conduct carried out through AI systems can cross state lines instantly. That interstate reach often triggers federal involvement rather than state-level prosecution.

Key areas drawing federal scrutiny include:

● Unauthorized data access under the Computer Fraud and Abuse Act (CFAA)

● Wire fraud involving AI-generated impersonation

● Financial manipulation using automated trading algorithms

● Large-scale identity theft enabled by deepfake technology

● Export violations tied to restricted AI model deployment

The scale and automation inherent in AI systems can amplify what might otherwise be minor infractions into multi-count federal indictments.

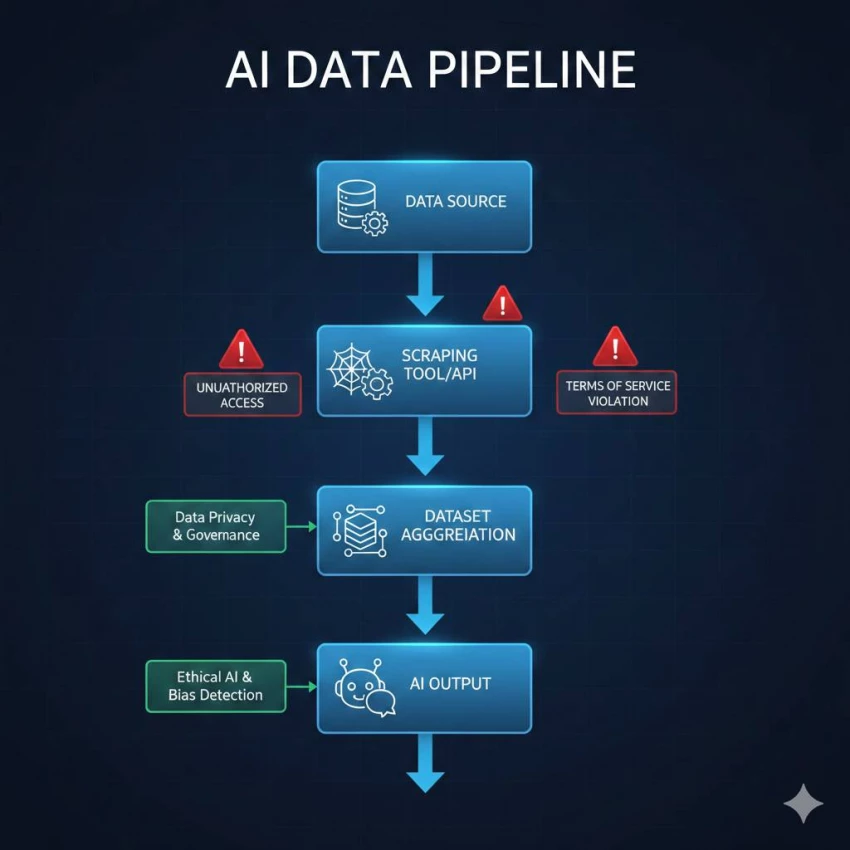

Training large language models often requires massive data ingestion. While publicly accessible content may appear “fair game,” courts have not fully resolved how copyright, database rights, and unauthorized access statutes apply to AI training pipelines.

Developers sometimes rely on:

● Automated scraping tools

● API workarounds

● Third-party datasets of uncertain provenance

If access methods violate platform terms of service or bypass technical restrictions, prosecutors may frame the activity as unauthorized access, which is a federal offense under certain circumstances.

Intent becomes critical. So does documentation of internal compliance protocols.

What begins as a technical shortcut can evolve into a legal vulnerability.
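One way to make an access decision reviewable is to check a site's published crawl rules before fetching and keep a record of the result. The sketch below uses Python's standard `urllib.robotparser` for that. The robots.txt text, the `example-research-bot` user agent, and the helper names `is_fetch_allowed` and `access_record` are all illustrative assumptions. A robots.txt check is only one signal: it does not establish authorization and does not replace a review of the platform's terms of service.

```python
from urllib.parse import urlsplit
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, inlined so the example runs offline.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""


def is_fetch_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the given robots.txt text permits user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


def access_record(robots_txt: str, user_agent: str, url: str) -> dict:
    """Build a small record of the access decision for internal documentation."""
    return {
        "url": url,
        "host": urlsplit(url).netloc,
        "user_agent": user_agent,
        "robots_allowed": is_fetch_allowed(robots_txt, user_agent, url),
    }


if __name__ == "__main__":
    for target in (
        "https://example.com/articles/1",
        "https://example.com/private/data.html",
    ):
        print(access_record(ROBOTS_TXT, "example-research-bot", target))
```

In practice the record would be written to durable storage alongside the date and the version of the terms of service that was reviewed, so that the reasoning behind each collection run can be reconstructed later.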

AI is increasingly integrated into fintech products, payment systems, and trading platforms. When automation intersects with financial transactions, federal statutes governing fraud, securities manipulation, and money laundering come into play.

Even indirect involvement, such as deploying a model that unintentionally facilitates fraudulent schemes, can trigger investigations if oversight mechanisms are weak.

Federal prosecutors evaluate:

● Internal compliance documentation

● Risk mitigation procedures

● Monitoring systems

● Audit trails

The presence or absence of safeguards can significantly influence prosecutorial decisions.
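An audit trail is more persuasive when it is hard to alter after the fact. As a minimal sketch of that idea, assuming an in-memory list and hypothetical helper names (`append_entry`, `verify`), each log entry below stores the SHA-256 hash of the previous entry, so any later edit breaks the chain. This is an illustration of the technique, not a description of what any regulator requires.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(event: dict, prev_hash: str) -> str:
    """Hash the event together with the previous entry's hash."""
    payload = json.dumps({"event": event, "prev": prev_hash}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_entry(log: list, event: dict) -> None:
    """Append an event, linking it to the hash of the entry before it."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"event": event, "prev": prev_hash, "hash": _digest(event, prev_hash)})


def verify(log: list) -> bool:
    """Recompute every hash in order; return False if any entry was altered."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash:
            return False
        if entry["hash"] != _digest(entry["event"], prev_hash):
            return False
        prev_hash = entry["hash"]
    return True


if __name__ == "__main__":
    log = []
    append_entry(log, {"action": "model_deployed", "reviewer": "compliance"})
    append_entry(log, {"action": "transaction_flagged", "rule": "velocity"})
    print(verify(log))  # chain intact
    log[0]["event"]["reviewer"] = "nobody"
    print(verify(log))  # chain broken by the edit
```

A production system would also need trusted timestamps and storage that the logged parties cannot rewrite wholesale; the chain only detects edits to individual entries.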

AI-generated voice cloning and synthetic video technology have opened new frontiers in impersonation. When these tools are used for extortion, political interference, or financial deception, federal authorities often assert jurisdiction.

Crimes involving interstate communications, financial institutions, or federal agencies escalate quickly. Wire fraud statutes, conspiracy charges, and computer intrusion laws may apply simultaneously.

Developers building synthetic media platforms must consider not only product design but also misuse-prevention frameworks.

Risk does not arise solely from creation. It often arises from facilitation.

Large organizations deploying AI at scale face an additional layer of exposure: corporate liability.

Federal investigations increasingly examine:

● Board oversight

● Internal compliance programs

● Employee training

● Reporting structures

Inadequate supervision can shift responsibility from individual actors to organizational leadership.

Proactive governance is no longer optional in high-growth tech sectors.

A federal inquiry typically begins quietly: a subpoena for records, a request for internal communications, or a search warrant targeting digital infrastructure.

At that stage, the focus moves from innovation strategy to legal defense strategy.

Companies and individuals facing allegations tied to AI systems often require counsel experienced in navigating complex federal investigations. Matters involving alleged violations of computer fraud statutes, financial crimes, or cross-border data issues frequently demand the expertise of a seasoned federal crimes defense lawyer who understands both technological systems and federal prosecutorial procedures.

Federal cases differ from state-level matters in scope, investigative resources, and sentencing exposure. Early strategic decisions, whether to cooperate, negotiate, or litigate, can significantly influence outcomes.

Federal sentencing guidelines often factor in:

● Monetary loss amounts

● Number of affected victims

● Sophistication of the scheme

● Use of specialized technical knowledge

● Obstruction or cooperation

In AI-related prosecutions, automation scale may inflate loss calculations dramatically.

A system affecting thousands of users can convert a limited technical misstep into substantial sentencing exposure.

For startups and enterprise AI teams alike, compliance architecture is becoming as important as product architecture.

Key preventative measures include:

● Transparent data sourcing policies

● Clear documentation of access permissions

● Robust monitoring for misuse

● Independent security audits

● Internal legal review before deployment
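The first two measures in the list above can be partly automated. As a rough sketch, assuming a hypothetical `DatasetRecord` schema with `source`, `license`, and `access_basis` fields (none of which come from a standard), a pre-deployment check can flag datasets whose provenance is undocumented so they reach legal review before training begins.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional

# Fields that must be filled in before a dataset is cleared (illustrative choice).
REQUIRED_FIELDS = ("source", "license", "access_basis")


@dataclass(frozen=True)
class DatasetRecord:
    """Provenance entry for one training dataset (hypothetical schema)."""
    name: str
    source: Optional[str] = None        # where the data came from
    license: Optional[str] = None       # license or contract covering its use
    access_basis: Optional[str] = None  # e.g. "public API with key", "vendor agreement"


def missing_fields(record: DatasetRecord) -> List[str]:
    """List the required provenance fields that are empty for this record."""
    return [name for name in REQUIRED_FIELDS if not getattr(record, name)]


def needs_review(records: List[DatasetRecord]) -> Dict[str, List[str]]:
    """Map each incompletely documented dataset to its missing fields."""
    flagged = {}
    for record in records:
        gaps = missing_fields(record)
        if gaps:
            flagged[record.name] = gaps
    return flagged


if __name__ == "__main__":
    manifest = [
        DatasetRecord("forum-dump", source="third-party vendor"),
        DatasetRecord("docs-corpus", "internal wiki", "company-owned", "employee access"),
    ]
    print(needs_review(manifest))
```

A check like this only confirms that the paperwork exists. Whether a given license or access basis is actually sufficient remains a question for counsel.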

As regulatory scrutiny intensifies, proactive compliance becomes a competitive advantage rather than a cost center.

Artificial intelligence is not inherently unlawful. It is transformative.

However, transformation at scale amplifies legal consequences when boundaries are crossed.

The federal government has shown increasing willingness to apply existing statutes to emerging technologies, even when those laws were drafted decades before AI systems existed.

For innovators, understanding that enforcement landscape is part of responsible development.

Technology evolves quickly. Federal jurisdiction evolves deliberately, but when it moves, it moves with substantial authority.
