I’ve spent the last several weeks testing Lindy AI, a platform that claims to turn natural-language instructions into automated workflows. I came across it repeatedly on lindy.ai, on Reddit threads, inside product comparison blogs, and even in a Udemy automation course. So I decided to integrate it into my daily workflow (email, Slack, CRM, documents, and admin tasks) to see how well the platform actually performs.

Throughout this review, I’ll share my first-hand observations along with insights from independent users across Trustpilot, G2, Capterra, Reddit, and several editorial sources. My goal here is not to sell anything but to document what Lindy is supposed to do, where it succeeds, and where it falls short based on real-world usage.

Lindy presents itself as an AI-first automation system built around “agents” instead of the traditional trigger-based setups you find in tools like Zapier or Make.com. The platform claims these agents can interpret natural language, understand context, and automate multi-step tasks without requiring flow diagrams.

In my experience, this worked reasonably well for simple communication workflows, especially email replies, Slack notifications, and basic document handling. However, when I attempted workflows involving branching logic or multiple data sources, Lindy occasionally produced inconsistent results or required manual supervision.

This gap between natural-language convenience and deep automation power is a recurring theme across community reviews too.

Lindy claims that its agents can interpret instructions the way a human assistant might: by reading context and deciding what to do rather than executing rigid rules. When I explained tasks like “Summarize customer emails and update my CRM,” Lindy generally understood the intent and executed the steps in sequence.

However, when details became more technical or required advanced reasoning (e.g., conditional steps, multiple integrations firing in a specific order), the AI struggled or misunderstood parts of the workflow. This matches reports on Reddit where users describe Lindy as solid for straightforward routines but unreliable for complex chaining.

The architecture seems optimized for communication-heavy workflows rather than deep logic flows.

To understand where Lindy fits, I compared it against three tools I use regularly: Zapier, Make.com, and RelevanceAI.

Zapier remains the most stable for traditional automation, even though it’s rigid and rule-based. Make.com handles complex workflows and data transformations better than Lindy, and RelevanceAI felt more advanced for multi-agent reasoning and research-oriented tasks.

Lindy sits somewhere in between. It’s supposed to simplify automation by removing flow-building entirely, but that simplicity comes at the cost of occasional unpredictability when workflows become too layered.

For communication tasks, Lindy felt the most natural. For data-heavy automations, the other tools still had an advantage.

Most of my Lindy experimentation happened inside Gmail, Slack, and Google Workspace, all areas where the platform supposedly performs best.


In Gmail, Lindy automatically drafted replies, filtered newsletters, categorized incoming messages, and created follow-up tasks. Most of this worked as claimed, though certain message threads confused the agent.

In Slack, Lindy posted automatic updates based on triggers I set. It identified important messages correctly some of the time, but not consistently.

When updating CRM entries via HubSpot and Notion, Lindy performed adequately for single-step updates. Multi-step updates occasionally lagged or executed partially.

Document extraction, summary generation, and moving files across Google Drive worked fine for simple cases. Advanced document workflows sometimes required manual adjustments.

Overall, Lindy handled communication tasks fairly well, but its reliability dipped when workflows involved more layers or unpredictable inputs.

One of Lindy’s most useful traits is its ability to interpret natural-language instructions. Instead of building flows manually, I simply described the task in plain English, for example: “Summarize every new lead email and send a Slack update.”

This conversational setup reduced friction substantially. I didn’t need to design multi-step diagrams, and the agent often figured out the sequence automatically.

However, this strength becomes a limitation when you need precise, technical instructions. The lack of traditional rule-building tools means you rely heavily on the AI’s interpretation, which isn’t always reliable.
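To make the trade-off concrete, here is a minimal sketch of what the same instruction (“Summarize every new lead email and send a Slack update”) looks like when written as explicit rules. This is not Lindy's API or how Lindy works internally; the `Email` class, the keyword rule in `is_lead`, and the truncation-based `summarize` are all hypothetical stand-ins I made up for illustration. The point is only that every decision is spelled out, which is exactly what you give up with a conversational setup.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Email:
    sender: str
    subject: str
    body: str


def is_lead(email: Email) -> bool:
    """Explicit rule (hypothetical): a lead mentions one of these keywords in the subject."""
    keywords = ("pricing", "demo", "quote")
    return any(keyword in email.subject.lower() for keyword in keywords)


def summarize(email: Email, max_words: int = 12) -> str:
    """Placeholder summary: the first few words of the body, not a real AI summary."""
    words = email.body.split()
    text = " ".join(words[:max_words])
    return text + ("..." if len(words) > max_words else "")


def build_slack_update(email: Email) -> str:
    """Format the message that would be posted to Slack (posting itself is omitted)."""
    return f"New lead from {email.sender}: {summarize(email)}"


def run_pipeline(inbox: List[Email]) -> List[str]:
    """Trigger -> filter -> action, in a fixed and inspectable order."""
    return [build_slack_update(email) for email in inbox if is_lead(email)]
```

With rules like these, a misclassified email can be traced to a specific line. With a natural-language agent, the equivalent of `is_lead` lives inside the model's interpretation, which is where I saw the inconsistency.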

Lindy promotes multi-agent collaboration (“agent swarms”) as a differentiator. When I tested swarms, I saw different agents handle different steps in a workflow. In theory, this improves parallel execution.

In practice, it worked for lighter workflows, like extracting data and sending a Slack message. For more advanced chains, agents occasionally produced incomplete outputs or misinterpreted handoffs. Reddit users mention similar inconsistency.

Swarm capability exists, but reliability fluctuates depending on workflow complexity.

Lindy’s library of 100+ templates is supposed to make onboarding faster, and in my case, it did. Most templates functioned adequately for basic operations like:

However, some templates behaved unpredictably when dealing with real-world data variations. Several users on Capterra and Trustpilot reported similar behavior.

The templates save time, but they still require testing and adjustment before being trusted for critical operations.

Stability varied considerably depending on workload and task complexity.

I wouldn’t rely on Lindy for mission-critical automation without supervision. It’s helpful for reducing repetitive tasks, but not mature enough for enterprise-level consistency.

Many Reddit users say Lindy feels “Google-first,” and my experience supports this. Nearly everything in the Google ecosystem (Gmail, Google Calendar, Google Drive, Docs, Sheets) performed more smoothly than integrations with Microsoft, Airtable, or external CRMs.

If your workspace operates primarily within Google tools, Lindy integrates quickly and behaves more predictably. If you’re outside that ecosystem, results may vary.

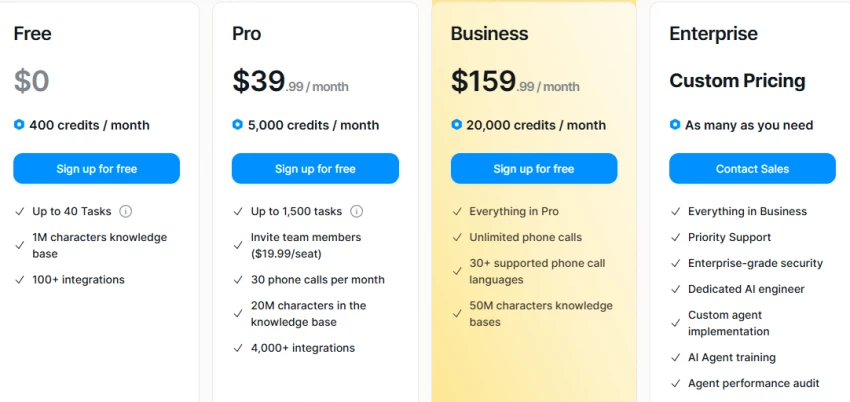

Lindy’s pricing model is credit-based. According to the pricing page, higher plans include more credits, but usage varies drastically depending on workflow complexity.

Based on my tests:

Trustpilot reviewers have also reported feeling that credits burned faster than expected. A few even mentioned discrepancies between advertised and delivered credits, something the company appears to respond to but hasn’t fully resolved.
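A back-of-the-envelope estimate helps explain why credits can feel like they burn faster than expected. The sketch below assumes, purely for illustration, that each workflow step costs a fixed number of credits. That is my simplification, not Lindy's documented billing rule, and every number in it is made up rather than taken from my tests or the pricing page.

```python
def estimate_monthly_credits(
    runs_per_day: int,
    steps_per_run: int,
    credits_per_step: float,
    days: int = 30,
) -> float:
    """Rough monthly usage under a flat per-step cost (a hypothetical model)."""
    return runs_per_day * steps_per_run * credits_per_step * days


# Hypothetical inputs: same number of daily runs, different workflow depth.
simple_workflow = estimate_monthly_credits(runs_per_day=20, steps_per_run=2, credits_per_step=1.0)
layered_workflow = estimate_monthly_credits(runs_per_day=20, steps_per_run=6, credits_per_step=1.0)

print(f"simple:  {simple_workflow:.0f} credits/month")
print(f"layered: {layered_workflow:.0f} credits/month")
```

Even under this simplified model, tripling the steps per run triples the monthly bill without any change in how often the workflow fires, so it's worth estimating before committing to a plan.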

To avoid bias, I studied user sentiment across multiple platforms:

Users mention strong workflow improvements but raise concerns about support delays and credit issues.

Most reviewers describe time savings and smooth scaling for teams.

Users appreciate the ROI but mention that pricing becomes steep for heavier automation.

Feedback generally warns that Lindy works best for Gmail-heavy routines and struggles with complex logic.

Across all platforms, Lindy’s sentiment is moderately positive but accompanied by consistent warnings about reliability and pricing.

Lindy claims to manage advanced automations, but in my experience, anything beyond 3–4 interconnected steps became unstable. Multi-file logic, conditional data extraction, long chains of events, or tasks requiring contextual memory often broke or produced incomplete results.

Bloggers, including the Gmelius review and Substack commentary, describe similar limits: strong for simple or medium workflows, inconsistent for advanced automation.

Based on this, I’d use Lindy for everyday automation, but not for multi-layered operations that demand consistently accurate logic.
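If you do run longer chains, the main thing you want is visibility into partial execution. Here's a minimal sketch of that kind of supervision, independent of any particular tool: a runner that executes named steps in order, stops at the first failure, and reports exactly which steps completed. The `run_chain` function and the step names in the usage example are hypothetical, and nothing here calls Lindy.

```python
from typing import Any, Callable, Dict, List, Tuple

Context = Dict[str, Any]
Step = Tuple[str, Callable[[Context], Context]]


def run_chain(steps: List[Step], context: Context) -> Dict[str, Any]:
    """Run steps in order; stop at the first exception and report progress."""
    completed: List[str] = []
    for name, step in steps:
        try:
            context = step(context)
        except Exception as exc:
            # Surface the partial run instead of silently continuing.
            return {
                "ok": False,
                "completed": completed,
                "failed": name,
                "error": str(exc),
                "context": context,
            }
        completed.append(name)
    return {"ok": True, "completed": completed, "failed": None, "error": None, "context": context}


# Usage with hypothetical steps: the second one simulates a failing integration.
def extract_contact(ctx: Context) -> Context:
    return {**ctx, "name": "Ana"}


def update_crm(ctx: Context) -> Context:
    raise RuntimeError("CRM timeout")


def notify_slack(ctx: Context) -> Context:
    return {**ctx, "notified": True}


report = run_chain(
    [("extract_contact", extract_contact), ("update_crm", update_crm), ("notify_slack", notify_slack)],
    {},
)
print(report["completed"], report["failed"])
```

A report like this makes it obvious when a run stopped halfway, which is the failure mode I kept having to check for by hand.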

Support was present, but slower than I expected. I saw similar patterns in Trustpilot reviews where the team replies to negative reviews but sometimes takes days or weeks to resolve deeper workflow issues.

Lindy is still evolving as a platform, and support responsiveness seems to reflect that maturity stage.

Lindy offers value if your day revolves around:

It handles these reasonably well.

However, if your needs involve:

Lindy may not be the best long-term match.

I’ve kept it for communication workflows because it reduces friction, but I still rely on Make.com or RelevanceAI for deeper automation.

Lindy appears to be a promising automation assistant for small teams, founders, and professionals who want fast relief from repetitive communication tasks. It’s simple, accessible, and faster to set up than many competing tools. However, it still suffers from reliability gaps, pricing friction, and limitations in advanced automation.

It works well, until workflows become too technical.

It saves time, until you try to scale its logic.

It’s helpful, but not yet enterprise-stable.

In short: Lindy is useful, but still maturing. I’ll continue using it, but selectively.
