When I first noticed Muke AI trending across various AI tool directories and niche review platforms, I wasn’t sure whether it was just another experimental tool or something genuinely useful. But as someone who works closely with AI systems, privacy frameworks, and digital ethics, I knew I had to test it firsthand before forming any conclusions.

The experience turned out to be revealing, and in many ways, concerning.

Before using any AI tool that handles human images, I ask myself:

With Muke AI, almost all of these questions were unanswered. The gaps were significant enough that I decided to thoroughly explore the tool, not as a casual experiment but as a full evaluation.

The homepage feels intentionally straightforward. Uploading an image is quick. Rendering is fast. The results look AI-generated, although not on par with industry-leading models.

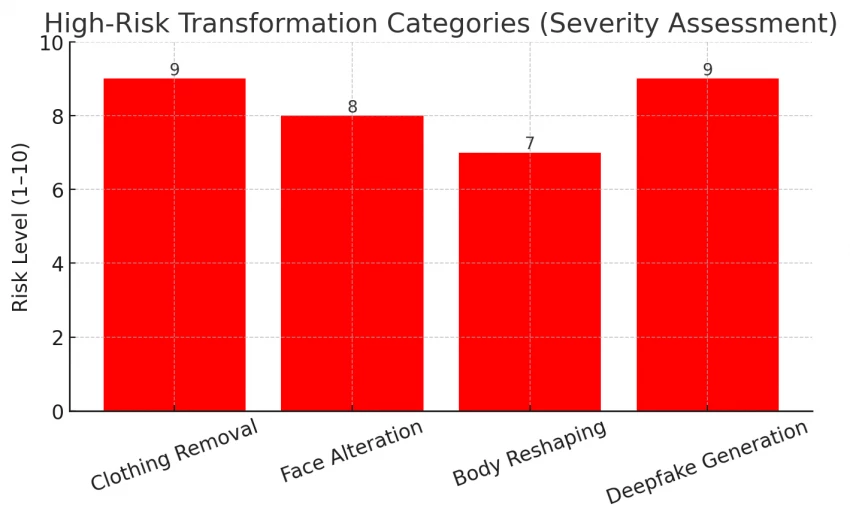

But what struck me immediately were the types of transformations offered: some innocent, others highly questionable. This is where my uncertainty turned into genuine concern.

Seeing these capabilities firsthand made me think more about intent, responsibility, and potential misuse.

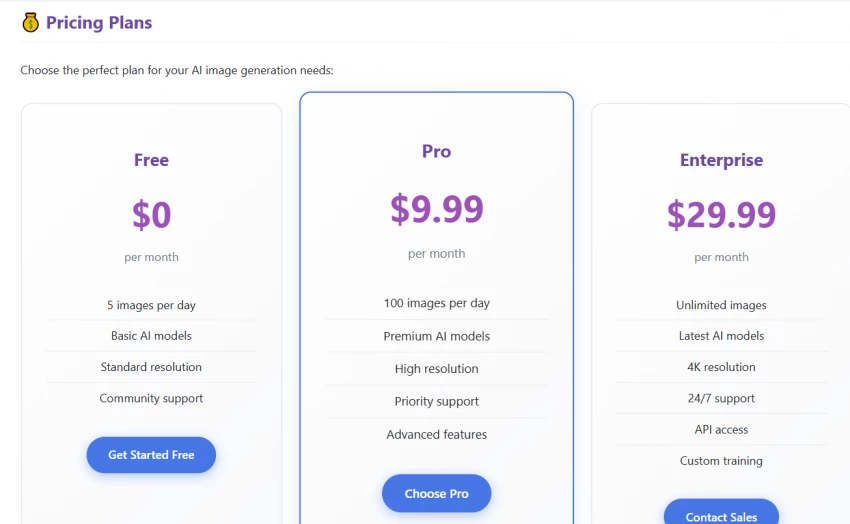

At first, Muke AI’s pricing seems fairly competitive. But when I tried using the service, it was hard to find straightforward information about billing limits, how user data is handled, or what their refund policy covers. A lot of the key details felt unclear, hidden, or not explained at all.

That impression also lines up with what I found in online reviews: while pricing is listed, the platform doesn’t do a great job of being transparent about the finer points.

I searched everywhere (LinkedIn, company registries, WHOIS records) and found:

Most reputable AI companies don’t hide their ownership.

Muke AI does. Completely.

Without knowing who operates the platform, there is no way to:

This alone raises legitimacy concerns.

The biggest issue I encountered is the complete lack of clarity around:

Given the sensitive nature of facial data, this is unacceptable.

As I continued reviewing, I realized Muke AI fails to provide:

This is not just a lack of information; it’s a transparency vacuum.

Because of the features available, I could see how easily someone could:

The tool lacks:

This makes Muke AI extremely vulnerable to misuse.

From my assessment:

If a platform operates without legal or industry compliance, the risk falls entirely on users.

When I compare it to platforms like:

The contrast is stark.

These reputable tools offer:

Muke AI offers none of these.

After testing it, I reviewed public feedback. Users often mention:

Everything I saw aligned with what I experienced myself.

After evaluating all aspects, here’s my conclusion:

If Someone Insists on Trying Muke AI: My Practical Safety Advice

If a person still wants to test it:

This is the minimum viable safety approach.

After hands-on testing, research, and evaluation, I believe:

Muke AI is not transparent, not accountable, not safe for personal use, and not aligned with responsible AI practices.

Until it provides:

…it remains a platform I cannot trust.
