Undress AI: Impact and How to Protect Yourself

Undress AI deepfake tool explained: features, dangers, privacy risks, legal concerns, and tips to stay safe from image manipulation.

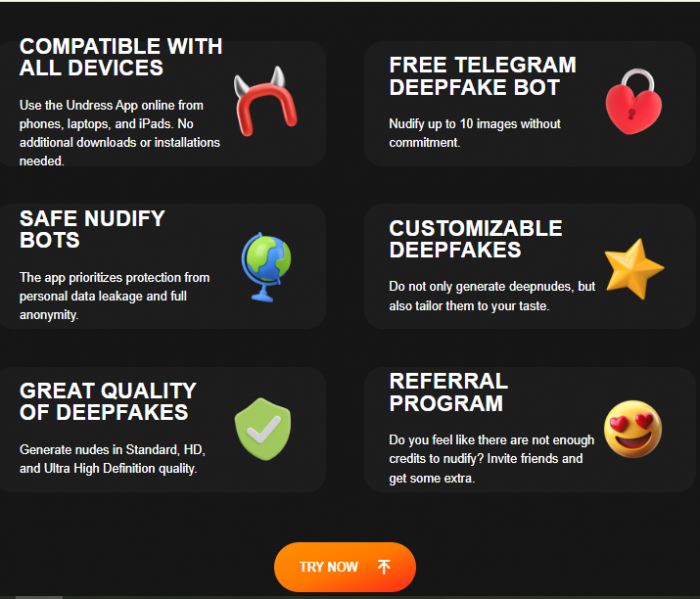

Undress AI is a deepfake tool that uses machine learning to generate fake nude images of clothed people, producing explicit photos without the subject’s consent. Some may argue that it demonstrates the technical progress of Generative Adversarial Networks (GANs), but its real-world impact is far from positive.

This isn’t just another “AI experiment.” It represents one of the darkest sides of artificial intelligence. By producing manipulated, fake nude images, it exposes victims to serious ethical, legal, and psychological harm.

Is Undress AI Safe to Use?

The short answer: no.

Even if the platform advertises privacy safeguards, its core function, creating fake explicit images without consent, makes it fundamentally unsafe. Many countries treat this as illegal deepfake pornography, punishable by fines or imprisonment.

To prevent the misuse of AI-powered deepfake tools, individuals, organizations, and governments should take proactive steps:

1. Public Awareness

◦ Educate people about the dangers of deepfake tools and their legal consequences.

2. Strict Cyber Laws

◦ Governments should enforce strict regulations to control the development and use of such tools.

3. AI Detection Systems

◦ Developers can build AI software that detects deepfake content and flags it before it spreads.

4. Report Misuse

◦ Victims should report unauthorized deepfake images to legal authorities and online platforms.

5. Avoid Using Harmful AI Tools

◦ Refuse to use such tools, and promote ethical AI practices that discourage the development of harmful technologies.

No one should use it for unethical or illegal purposes. However, some professionals, such as cybersecurity researchers and developers training deepfake detection systems, may study the technology to improve online safety.

Undress AI is not an innovation to celebrate. It is a warning sign. While deep learning fuels progress in healthcare, education, and finance, its misuse here shows why AI ethics and regulation are urgent.

Just as financial security depends on safe tools such as encrypted prepaid payment systems, digital dignity demands protection against deepfake exploitation.

In the digital era, protecting human rights and privacy is as critical as advancing technology.

1. Is Undress AI legal to use?

No. In most countries, using Undress AI to create non-consensual images is illegal. It can lead to criminal charges, civil lawsuits, and heavy fines.

2. Can Undress AI be used for ethical purposes?

Outside of cybersecurity research and AI detection training, Undress AI has no ethical consumer use. Its core functionality poses serious risks.

3. How can I tell if an image was created with Undress AI?

Look for visual artifacts such as inconsistent lighting, warped or blurred edges, and unnaturally smooth skin texture. AI deepfake detection tools can also help, although none are fully reliable, and researchers continue to improve them.

4. What should I do if someone shares a fake image of me online?

First, document everything: take screenshots and record the URLs, account names, and dates as evidence. Then report the content to the platform immediately, file a police complaint, and consider seeking legal support.

5. Can VPNs or anonymous accounts protect Undress AI users from law enforcement?

No. Even when users hide their identity, uploads often leave digital footprints. Authorities can trace activity through payment records, IP logs, and server data.

6. Are there safer alternatives to Undress AI for photo editing?

Yes, plenty. Ethical AI tools like Adobe Firefly or Runway ML allow creative editing without compromising privacy.

7. Why does Google show Undress AI in search results if it’s harmful?

Search engines index content automatically, but harmful tools often get demoted or de-indexed over time. Reporting such content can accelerate removal.

8. How can organizations fight against Undress AI misuse?

They can implement awareness campaigns, legal actions, AI-driven detection systems, and partnerships with law enforcement to curb abuse.
