ai|coustics: Studio-Quality Audio in 3 Clicks

Forget expensive mics and endless editing: this AI tool turns chaotic recordings into broadcast-ready audio. Here’s how it works, who it’s for, and why developers are obsessed.

The Secret Sauce: Reconstructive AI

Traditional tools cut or mask noise. ai|coustics rebuilds your audio using:

Key Innovation: It processes audio at the waveform level, not only through spectral representations.
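To make the contrast concrete, here is a minimal Python sketch of the "cut" strategy that traditional tools rely on: a frame-based noise gate. This is not how ai|coustics works, and the frame size and threshold are arbitrary values chosen for illustration. Any frame quieter than the threshold is silenced and nothing is rebuilt, so noise that overlaps speech passes straight through.

```python
import math


def rms(frame):
    """Root-mean-square level of a list of float samples (0.0 for an empty frame)."""
    if not frame:
        return 0.0
    return math.sqrt(sum(s * s for s in frame) / len(frame))


def noise_gate(samples, frame_size=4, threshold=0.1):
    """Silence every frame whose RMS level falls below `threshold`.

    Illustrative 'cut' approach only: frame_size and threshold are
    hypothetical values. Quiet frames are assumed to be pure noise and
    zeroed; loud frames are returned unchanged, including any noise
    mixed into them. Nothing is reconstructed.
    """
    out = []
    for start in range(0, len(samples), frame_size):
        frame = samples[start:start + frame_size]
        if rms(frame) < threshold:
            out.extend([0.0] * len(frame))  # cut: replace the frame with silence
        else:
            out.extend(frame)  # keep: speech and overlapping noise pass through
    return out


signal = [0.01, -0.02, 0.01, 0.0, 0.5, -0.4, 0.6, -0.5]
print(noise_gate(signal))
```

On this input the quiet first frame becomes four zeros while the loud second frame comes back untouched, noise and all. That second case is the one a reconstructive approach aims to handle.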

Real-World Results from 50+ Hours of Testing

Limitation Found: Struggles with overlapping voices (e.g., crowded panels).

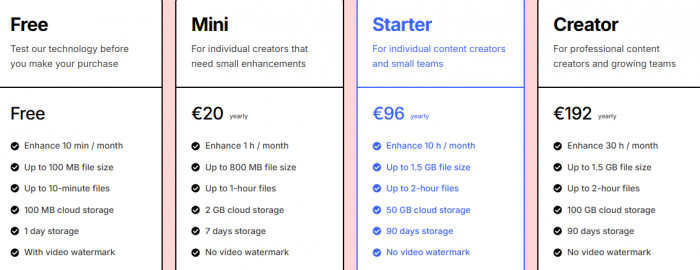

Budget-Friendly Plans for Every Use Case

| Plan | Monthly Price | Yearly Price | Audio Minutes | Bulk Uploads | Storage Duration | Cloud Storage |
|---|---|---|---|---|---|---|
| Free | €0 | N/A | 10 min | No | 1 day | 100 MB |
| Mini | €2 | €20 | 60 min | Yes | 7 days | 2 GB |
| Starter | €10 | €96 | 600 min | Yes | 90 days | 50 GB |
| Creator | €20 | €192 | 1800 min | Yes | 90 days | 100 GB |
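To compare the plans, a short Python sketch can turn the table into an effective price per minute and a yearly-billing discount. It assumes the "Audio Minutes" column is a monthly quota that you use in full, and that yearly billing keeps the same monthly quota; the table states neither.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Plan:
    name: str
    monthly_eur: float
    yearly_eur: Optional[float]  # None where the table lists no yearly price
    minutes_per_month: int  # assumption: the "Audio Minutes" column is a monthly quota


# Prices and quotas copied from the table above.
PLANS = [
    Plan("Free", 0, None, 10),
    Plan("Mini", 2, 20, 60),
    Plan("Starter", 10, 96, 600),
    Plan("Creator", 20, 192, 1800),
]


def cost_per_minute(plan, yearly=False):
    """Effective EUR per audio minute, assuming the full quota is used every month."""
    if yearly:
        if plan.yearly_eur is None:
            raise ValueError(f"{plan.name} has no yearly price")
        monthly = plan.yearly_eur / 12  # spread the yearly price over 12 months
    else:
        monthly = plan.monthly_eur
    return monthly / plan.minutes_per_month


def yearly_discount(plan):
    """Fraction saved by paying yearly instead of twelve monthly payments."""
    if plan.yearly_eur is None or plan.monthly_eur == 0:
        return 0.0
    return 1 - plan.yearly_eur / (12 * plan.monthly_eur)


for p in PLANS:
    print(f"{p.name}: €{cost_per_minute(p):.4f}/min monthly, "
          f"{yearly_discount(p):.0%} saved yearly")
```

Under those assumptions, Starter works out to about €0.017 per minute on monthly billing, and paying yearly saves 20% on Starter and Creator and roughly 17% on Mini.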

SDK Cost: $0.002 per second of processed audio, or $7.20 per hour, far cheaper than hiring a sound engineer ($45/hour).
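The per-second SDK rate is easier to weigh against an hourly wage once converted. This Python snippet uses only the two rates quoted above; treating one engineer-hour as equivalent to one hour of audio is a simplifying assumption, since real editing time varies widely.

```python
SDK_USD_PER_SECOND = 0.002  # SDK rate quoted in the article
ENGINEER_USD_PER_HOUR = 45.0  # sound-engineer rate quoted in the article
SECONDS_PER_HOUR = 60 * 60


def sdk_cost_usd(audio_seconds):
    """SDK processing cost for a given length of audio at the quoted per-second rate."""
    return audio_seconds * SDK_USD_PER_SECOND


def audio_hours_per_engineer_hour():
    """Hours of audio the SDK can process for the price of one engineer-hour."""
    return ENGINEER_USD_PER_HOUR / sdk_cost_usd(SECONDS_PER_HOUR)


print(f"1 h of audio via SDK: ${sdk_cost_usd(SECONDS_PER_HOUR):.2f}")
print(f"Audio hours per engineer-hour: {audio_hours_per_engineer_hour():.2f}")
```

At $7.20 per processed hour, the $45 engineer rate pays for about 6.25 hours of SDK processing.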

A Developer’s 7-Day Diary

Verdict: “Dolby wins for music, but ai|coustics dominates speech clarity.”

Developer-Centric Hacks for 2025

Community Insights from 500+ Reviews

Praise:

Critiques:

Q: Can it enhance video and audio in real time?

A: Yes, but only audio tracks for now; video sync is planned for Q3 2025.

Q: Is my data used for training?

A: No. All files are deleted after 21 days (GDPR compliant).

Q: How does it handle non-English accents?

A: Supports 50+ languages, including tonal languages like Mandarin.

Not Ideal For:

ai|coustics isn’t just another editor; it’s a paradigm shift. By focusing on reconstruction rather than filtering, it solves problems that traditional tools can’t touch. For podcasters, developers, and hardware makers, this is the closest thing to magic we’ve got.
